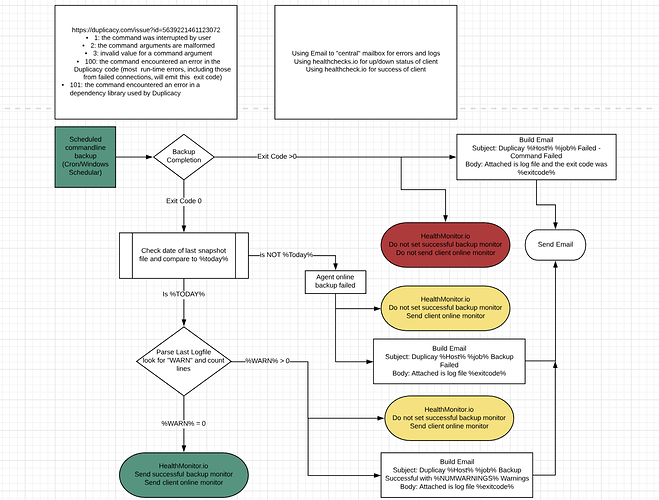

Maybe I am mistaken, but I’ve observed a few conditions:

- ERROR when a file should be accessible but is not
- WARN when a file simply is not accessible

And the backup still completes, with a snapshot, when a WARN condition occurs. See the excerpt from my logs below:

01:25:27.848 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\Application Data: Access is denied.

01:25:27.849 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\###\Cookies: Access is denied.

01:25:27.849 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\Local Settings: Access is denied.

01:25:27.850 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\My Documents: Access is denied.

01:25:27.850 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\###\NetHood: Access is denied.

01:25:27.850 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\PrintHood: Access is denied.

01:25:27.851 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\Recent: Access is denied.

01:25:27.851 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\SendTo: Access is denied.

01:25:27.851 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\Start Menu: Access is denied.

01:25:27.852 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\Templates: Access is denied.

01:25:29.635 Failed to read the symlink AppData/Local/Packages/#########_b6e429xa66pga/LocalCache: Unhandled reparse point type 80000018

01:25:29.863 Failed to read the symlink AppData/Local/Packages/Microsoft.Microsoft3DViewer_8wekyb3d8bbwe/LocalCache: Unhandled reparse point type 80000018

<snip>

01:29:08.712 Backup for C:\Users\#### at revision 41 completed

01:29:08.712 Files: 64863 total, 38,638M bytes; 6544 new, 1,690M bytes

01:29:08.712 File chunks: 8074 total, 40,886M bytes; 114 new, 663,321K bytes, 322,280K bytes uploaded

01:29:08.712 Metadata chunks: 9 total, 21,866K bytes; 9 new, 21,866K bytes, 7,410K bytes uploaded

01:29:08.712 All chunks: 8083 total, 40,907M bytes; 123 new, 685,188K bytes, 329,690K bytes uploaded

01:29:08.712 Total running time: 00:03:47

01:29:08.712 12 files were not included due to access errors

01:29:08.789 The shadow copy has been successfully deleted
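In this excerpt, the twelve "Failed to read the symlink" lines shown before `<snip>` (ten "Access is denied", two "Unhandled reparse point type 80000018") match the summary's "12 files were not included due to access errors". To make that cross-check on a saved log, a minimal Python sketch could tally the failures by reason. It assumes the log is plain text in exactly the format pasted above; the regular expressions and the `summarize_failures` helper are hypothetical, not part of Duplicacy.

```python
import re
import sys
from collections import Counter

# Hypothetical patterns, written against the log lines quoted in this post, e.g.
#   01:25:27.848 Failed to read the symlink: readlink C:\...\Cookies: Access is denied.
#   01:25:29.635 Failed to read the symlink AppData/.../LocalCache: Unhandled reparse point type 80000018
FAILED_RE = re.compile(r"^\d{1,2}:\d{2}:\d{2}\.\d{3} Failed to read the symlink:? (.*)$")
SKIPPED_RE = re.compile(r"(\d+) files were not included due to access errors")


def summarize_failures(log_text: str):
    """Count symlink failures by reason and return the summary's skipped-file count (or None)."""
    reasons = Counter()
    for line in log_text.splitlines():
        m = FAILED_RE.match(line.strip())
        if m:
            # The reason follows the last ": "; Windows paths contain ":" but not ": ".
            reason = m.group(1).rsplit(": ", 1)[-1].rstrip(".")
            reasons[reason] += 1
    m = SKIPPED_RE.search(log_text)
    reported = int(m.group(1)) if m else None
    return reasons, reported


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python summarize_failures.py path/to/saved.log  (file name is illustrative)
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        reasons, reported = summarize_failures(f.read())
    for reason, count in reasons.most_common():
        print(f"{count:4d}  {reason}")
    print(f"failure lines: {sum(reasons.values())}, summary reports: {reported}")
```

If the two totals differ, some skipped files were logged in a form these patterns do not recognise, so the patterns would need extending.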

Looking at those specifically, none of them is really an issue for me, and they should be excluded anyway; however, it does tell me that there are “Failed” conditions that the backup engine treats as WARN conditions in the logs while still completing the backup.
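For unattended runs, this means "completed" alone does not guarantee that every file made it into the snapshot. A wrapper script could read the two summary lines and report a third state. The sketch below assumes the summary wording shown in the log above; the status names and exit codes are hypothetical conventions for a scheduler, not something Duplicacy itself defines.

```python
import re
import sys

# Patterns based on the summary lines in the log excerpt above (assumed wording).
COMPLETED_RE = re.compile(r"Backup for .+ at revision (\d+) completed")
SKIPPED_RE = re.compile(r"(\d+) files were not included due to access errors")

# Hypothetical exit codes for a wrapper script; adjust to what your scheduler expects.
EXIT_CODES = {"completed": 0, "completed_with_warnings": 1, "incomplete": 2}


def backup_status(log_text: str) -> str:
    """Classify one run from its log text, using only the two summary lines."""
    if not COMPLETED_RE.search(log_text):
        return "incomplete"  # no 'Backup for ... completed' line was found
    skipped = SKIPPED_RE.search(log_text)
    if skipped and int(skipped.group(1)) > 0:
        return "completed_with_warnings"  # a revision was created, but some files were skipped
    return "completed"


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        status = backup_status(f.read())
    print(status)
    sys.exit(EXIT_CODES[status])
```

Run against the log above, this would return `completed_with_warnings`, which is the distinction the question is about: the revision exists, but twelve entries are missing from it.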

Or am I missing something obvious?

Thanks!

So it always came down to me bugging them anyway.