Another user on github.com suggested using https://healthchecks.io/ to detect failed backups. You can even write Pre Command and Post Command scripts to contact healthchecks.io when the backup is successful.

Using https://healthchecks.io is interesting, I wasn’t aware of that. They have had an outage, though. Not sure how I feel about a “one-man show”.

The below is for Arq Backup (I’m migrating from Arq to Duplicacy so I can pick up Azure as a target). I’ll run this until I complete my conversion. Once my conversion is completed, I’ll make trivial changes for Duplicacy once I decide what my scripting will generate.

To answer your question, the date/time of the generated E-Mail from Arq (sent when a backup job completes) serves as the backup date (the “ping”, if you will, that the backup ran). If it has “(0 errors)” in the subject, then a Gmail filter puts it in “backup-logs” and removes it from Inbox. I never see it unless I look for it. If it does not have “(0 errors)”, then it sticks in my inbox. That’s how the filter is set up.

Then I have the following (super simple) code running on Google servers. If it doesn’t see any messages in “backup-logs”, then it generates an E-Mail. If it sees messages, it moves them to trash (deleted in 30 days). You can have as many scripts running, on any schedule, as you’d like. You can customize different scripts for different backup jobs. And you have full control over when the scripts run.

Let me know if you have further questions …

```javascript
function arqBackup_Office() {
  var threads = GmailApp.search('label:"backup-logs"');
  var backupHost = "Office-iMac";
  var foundBackup = 0;

  // Backups from Arq Backup with no errors get filtered to label "backup-logs" via Gmail settings.
  // This makes them easy for us to find and iterate over.
  //
  // Backups are scheduled at least as often as this script runs. Thus, if nothing was run when this
  // script runs, then we get active notification that something is wrong with the backup process.
  //
  // Be aware that Arq Backup must be configured to use the subject messages specified below
  // (with backupHost name) in order for this script to capture the messages properly.
  for (var i = 0; i < threads.length; i++) {
    if (threads[i].getFirstMessageSubject() == "ARQ Backup Results for " + backupHost + " (0 errors)") {
      threads[i].moveToTrash();
      foundBackup++;
    }
  }

  if (foundBackup == 0) {
    GmailApp.sendEmail('mail-address@yourdomain.com',
                       'WARNING: No backup log files received from ARQ Backup on ' + backupHost,
                       'Please investigate ARQ Backup process, jobs do not appear to be getting scheduled!');
  }
}
```

And here is my server-side monitor. It is run from the directory that all my clients back up TO. It prints to the screen, and I just redirect it with >> (append) to a log file that I look at periodically. I’d like to incorporate email someday.

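```bash
#!/bin/bash

# Print the modification date of the newest file in the current snapshot
# directory, followed by the disk usage of the owning user's directory.
function bstatus() {
    recent_dir=$(ls -t1 2>/dev/null | head -1)
    # Revision files have integer names; -eq fails for anything else (or nothing).
    if [ "$recent_dir" -eq "$recent_dir" ] 2>/dev/null; then
        echo "\"$(date -r "$recent_dir")\"   $(du -sh ../..)"
    else
        echo ""
    fi
}

echo "******************************************"
echo "Checking backup status at $(date)"
echo "******************************************"

cd /share/homes/backups || exit 1

# Note: directory names containing spaces are not handled by this loop.
for user in $(find . -maxdepth 1 -type d | tail -n +2)
do
    # echo "---" $user
    if [ -d "$user/snapshots" ]; then
        cd "$user/snapshots" || continue
    else
        continue
    fi

    for snapshot in *
    do
        [ -d "$snapshot" ] || continue
        echo "________________________________________________________________________________________ "
        cd "$snapshot" || continue
        printf "%-50s ||||| %-30s\n" "$snapshot" "$(bstatus)"
        cd ..
    done

    cd ../..
done
```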
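For anyone who prefers Python on the server, here is a minimal sketch of the same idea. It assumes the storage layout the script above walks (`<root>/<user>/snapshots/<snapshot id>/<revision>`, with integer revision file names). Unlike the shell version, it picks the highest revision number rather than the most recently modified file. The function names `latest_revisions` and `report` are made up for this example.

```python
import os
import time


def latest_revisions(storage_root):
    """Yield (user, snapshot_id, revision_name, mtime) for the newest revision of each snapshot ID."""
    for user in sorted(os.listdir(storage_root)):
        snapshots_dir = os.path.join(storage_root, user, "snapshots")
        if not os.path.isdir(snapshots_dir):
            continue
        for snapshot_id in sorted(os.listdir(snapshots_dir)):
            id_dir = os.path.join(snapshots_dir, snapshot_id)
            if not os.path.isdir(id_dir):
                continue
            # Assumption: revision files are named with plain integers (1, 2, 3, ...).
            names = [n for n in os.listdir(id_dir) if n.isascii() and n.isdigit()]
            if not names:
                continue
            newest = max(names, key=int)
            mtime = os.path.getmtime(os.path.join(id_dir, newest))
            yield user, snapshot_id, newest, mtime


def report(storage_root, now=None):
    """Return one human-readable line per snapshot ID with the age of its newest revision."""
    now = time.time() if now is None else now
    lines = []
    for user, snapshot_id, revision, mtime in latest_revisions(storage_root):
        age_hours = (now - mtime) / 3600
        lines.append("{:<20} {:<30} rev {:<6} last modified {:.1f} h ago".format(
            user, snapshot_id, revision, age_hours))
    return lines
```

Calling `print("\n".join(report("/share/homes/backups")))` from cron and appending the output to a log file would mirror how the shell script is used.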

Jeffaco, thank you. In Duplicacy I don’t see how to emulate what you’ve set up with Arq, where Arq lists the number of errors in the email subject. I’ve searched around for a %STATUS% or similar variable that I can put into the Duplicacy GUI’s email configuration.

Kevinvinv, thank you as well. It’s a nice and simple approach; however, if I am reading this right, the ‘bstatus’ you are pulling is the date of the snapshot. Are you able to see whether a snapshot completed successfully but with errors?

gchen, this solution is interesting for me as well. The email method is only a “ping”: either it received an email or it did not. Thus I could tell if a client goes offline, but not if it is online and the backup had errors.

I could see myself successfully using curl and their URL ‘ping’ with two monitors per client (client online and backup status).
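As a rough illustration of the two-monitors-per-client idea, here is a minimal Python sketch. It assumes a wrapper script that already knows the backup's exit code, and it uses the healthchecks.io ping endpoint (`https://hc-ping.com/<uuid>`, with `/fail` appended to signal a failure). The check UUIDs and the helper names `ping_url`, `send_ping` and `report` are placeholders, not anything from Duplicacy.

```python
import urllib.request

PING_BASE = "https://hc-ping.com"  # healthchecks.io ping endpoint


def ping_url(check_uuid, ok=True):
    """Return the ping URL: the bare URL signals success, '/fail' signals failure."""
    url = "{}/{}".format(PING_BASE, check_uuid)
    return url if ok else url + "/fail"


def send_ping(url, timeout=10):
    """Best-effort GET; a monitoring hiccup should never break the backup job."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError and HTTPError are subclasses of OSError
        return False


def report(online_uuid, backup_uuid, backup_exit_code, sender=send_ping):
    """Always ping the 'client online' check; ping the 'backup status' check with the result."""
    return {
        "online": sender(ping_url(online_uuid)),
        "backup": sender(ping_url(backup_uuid, ok=(backup_exit_code == 0))),
    }
```

With this split, a client that is offline misses both pings, while a client that is online with a failing backup still reports in on the first check and flags the second.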

Do you have documentation on pre and post scripts for Windows clients?

Are there variables that can be included in emails or fed to pre/post scripts that describe the success or errors of a completed backup?

Thanks all.

Hi Lance,

My understanding from gchen is that the snapshot file is the last thing to be uploaded, so if it is there, the backup completed without error.

I think sometime in the future we will be getting a way to verify the chunk files and sizes present in each snapshot (gchen is working on something in this regard) from the server side - you can already do this from the client side but it might take awhile depending on connection etc.

All the best!!

Hi Lance,

You asked:

> Jeffaco, Thank you. In Duplicacy I dont see how to emulate as you've setup with Arq where Arq will list the number of errors in the email subject. I've searched around for a %STATUS% or similar type of variable that I can put into the Duplicacy configuration gui for email.

Keep in mind that you can search the body of messages as well for text (ERROR: or whatever).

In my case, I’m working on scripting to drive duplicacy from Mac OS/X. When using scripting, I can just look at the resulting status code ($? on Mac/Linux) to see if there were errors or not. No, I don’t get an error count, but I do get the fact that an error occurred, and I can use that to construct a subject line that’s trivially filtered by Gmail.
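A minimal Python sketch of that approach, for anyone scripting it: run the backup command, capture the exit status, and build a subject line that a Gmail filter can match on. The subject wording and the helper names `run_backup` and `subject_for` are invented for this example; only the use of the exit status comes from the description above.

```python
import subprocess
import sys


def run_backup(command):
    """Run the backup command and return (exit_code, combined stdout/stderr text)."""
    proc = subprocess.run(command, stdout=subprocess.PIPE,
                          stderr=subprocess.STDOUT, text=True)
    return proc.returncode, proc.stdout


def subject_for(host, exit_code):
    """Build a subject line that is trivial to filter on."""
    if exit_code == 0:
        return "Backup results for {} (OK)".format(host)
    return "Backup results for {} (FAILED, exit code {})".format(host, exit_code)


if __name__ == "__main__":
    # Assumes the duplicacy CLI is on PATH and the script runs inside an initialized repository.
    code, output = run_backup(["duplicacy", "backup"])
    print(subject_for("Office-iMac", code))
    sys.exit(code)
```

A filter on "(OK)" can then archive successful runs, leaving anything else in the inbox, just like the “(0 errors)” filter described for Arq.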

Also, if you want to use the GUI, I’ve found Gang to be awesome about enhancement requests. Ask for %STATUS% to be added to the GUI, and I suspect you’d find it soon enough.

Hope this helps.

Lance, if a backup fails with any error, it won’t run the post-backup script, so you’ll know something is wrong.

I’m all for feedback so please comment/correct where I’m wrong. (I am new to Duplicacy)

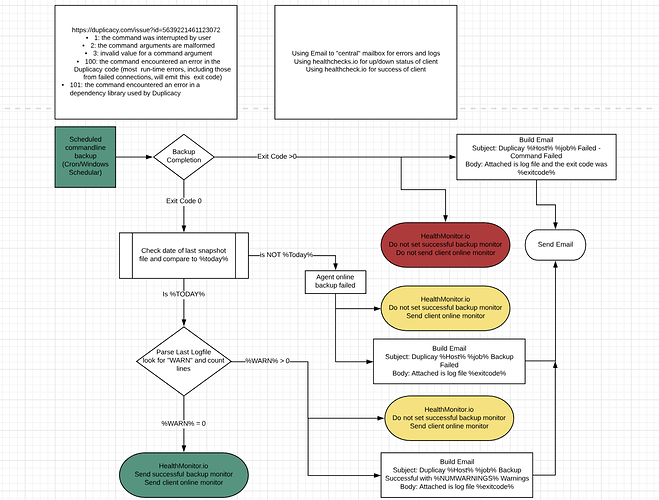

So, in essence, to back up my family and have decent monitoring in place, I’ve come to the conclusion that the Duplicacy GUI is not sufficient. As such, I think I can do what I want with the command-line version and a small program around it.

Please take a look at the flow if you don’t mind and give me your feedback:

https://www.lucidchart.com/documents/view/8d385416-1dc1-46e0-b6f7-ef08fe930aa8/0

I’d probably code this in something cross-platform like Python, with a .yaml file for all the variables, but I think this would do what I want…

I’m not quite clear on why you’d want to check the date of last snapshot file.

If you get a success code (exit 0) from duplicacy, what situations would there be where the backup didn’t actually succeed? And if such cases do exist, wouldn’t that be a bug?

Maybe I am mistaken, but I’ve observed a few conditions:

ERROR when a file should be accessible but is not

WARN when a file simply is not accessible

The backup still completes with a snapshot when a WARN condition occurs. See the below from my logs:

```
01:25:27.848 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\Application Data: Access is denied.
01:25:27.849 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\###\Cookies: Access is denied.
01:25:27.849 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\Local Settings: Access is denied.
01:25:27.850 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\My Documents: Access is denied.
01:25:27.850 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\###\NetHood: Access is denied.
01:25:27.850 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\PrintHood: Access is denied.
01:25:27.851 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\Recent: Access is denied.
01:25:27.851 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\SendTo: Access is denied.
01:25:27.851 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\Start Menu: Access is denied.
01:25:27.852 Failed to read the symlink: readlink C:\Users\####\.duplicacy\shadow\Users\####\Templates: Access is denied.
01:25:29.635 Failed to read the symlink AppData/Local/Packages/#########_b6e429xa66pga/LocalCache: Unhandled reparse point type 80000018
01:25:29.863 Failed to read the symlink AppData/Local/Packages/Microsoft.Microsoft3DViewer_8wekyb3d8bbwe/LocalCache: Unhandled reparse point type 80000018
<snip>
01:29:08.712 Backup for C:\Users\#### at revision 41 completed
01:29:08.712 Files: 64863 total, 38,638M bytes; 6544 new, 1,690M bytes
01:29:08.712 File chunks: 8074 total, 40,886M bytes; 114 new, 663,321K bytes, 322,280K bytes uploaded
01:29:08.712 Metadata chunks: 9 total, 21,866K bytes; 9 new, 21,866K bytes, 7,410K bytes uploaded
01:29:08.712 All chunks: 8083 total, 40,907M bytes; 123 new, 685,188K bytes, 329,690K bytes uploaded
01:29:08.712 Total running time: 00:03:47
01:29:08.712 12 files were not included due to access errors
01:29:08.789 The shadow copy has been successfully deleted
```

Now, when I look at those specifically, none of them is really an issue for me, and they should be excluded; however, it does tell me that there are “Failed” conditions that the backup engine treats as “WARN” conditions in the logs while still completing the backup.

Or am I missing something obvious?

Thanks!

What is the exit status of this backup? While it finished, was the exit code zero or non-zero?

Honestly, I’m unconvinced that this is an error anyway. After all, if you don’t have access to files that you tried to back up, but everything you did have access to backed up successfully, I’d call this a “good” backup. If you want to flag it for errors, I guess you can (grep for “not included due to access errors”). Personally, I’d just look at the logs from time to time (once every few weeks, maybe), even on success, to trap this sort of thing. Or you might want to include, in the success E-Mail, the last 10 lines of the log or so (making it super trivial to just look at the backup log sent via E-Mail).

I back up files as my user account (non-privileged). If I try to back up files that I don’t have access to, I’d expect this error. I’d rather have this than run with privileges; I always minimize what runs with privileges for obvious reasons.
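Both suggestions (flagging the access-error line and attaching the last 10 log lines) are easy to script. Here is a minimal Python sketch. It only relies on the summary line seen in the log earlier in this thread; accepting a singular “1 file was” form is a guess, and the helper names `skipped_file_count` and `log_tail` are made up for the example.

```python
import re

# Matches the summary line seen in the log above, e.g.
# "12 files were not included due to access errors".
# The singular alternative is an assumption; only the plural form appears in this thread.
SKIPPED_RE = re.compile(r"(\d+) files? (?:was|were) not included due to access errors")


def skipped_file_count(log_text):
    """Return the number of files skipped due to access errors, or 0 if the line is absent."""
    match = SKIPPED_RE.search(log_text)
    return int(match.group(1)) if match else 0


def log_tail(log_text, lines=10):
    """Return the last few lines of the log, suitable for the body of a status E-Mail."""
    return "\n".join(log_text.splitlines()[-lines:])
```

A non-zero count could be appended to the subject line of the success E-Mail, so a filter can still tell a clean run from one that skipped files.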

I don’t have the specific exit code, as that was a GUI-scheduled backup run as a service. Based on the published documentation, it should have been 0: Exit codes details

My next step would be to validate this for these types of situations. If the exit code for a fully successful backup is zero and for a successful backup with warnings is something like 1, that makes automating the monitoring much easier, but it would also be an unpublished “feature”.

While I agree with the general concept of running unprivileged, shadow copies and filesystem snapshots are fantastic tools to get consistent backups. For example, if a very large file changes while backing up, without some sort of snapshot tech in place, your backup could end up with 1/2 of the old version and 1/2 of the new version as a single file and not know better. It is entirely possible Duplicacy handles this condition as well, but I don’t know. Other files, like .pst files, are almost always locked but are important.

In any case, Duplicacy handles the portions that require elevated accounts, while what I am planning on coding is some automation around detection of failures and non-running backups.

For the first MVP, I’d probably do exactly that and simply output the status as email. The longer-term goal, however, is a lot more “fire and forget” automation, so that emails are reduced to digest-style messages for success and regular emails for errors and warnings.
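One possible shape for that digest-versus-immediate split, as a minimal Python sketch. It assumes each run has already been reduced to a host name, an exit code, and a count of files skipped due to access errors. The `BackupResult` record and the three severity labels are invented for the example.

```python
from collections import namedtuple

# Hypothetical per-run record: host name, exit code, files skipped due to access errors.
BackupResult = namedtuple("BackupResult", "host exit_code skipped_files")


def severity(result):
    """Classify a run: a non-zero exit is an error, skipped files are only a warning."""
    if result.exit_code != 0:
        return "ERROR"
    if result.skipped_files > 0:
        return "WARNING"
    return "OK"


def route(results):
    """Split runs into those to email right away and those to hold for the digest."""
    immediate = [r for r in results if severity(r) != "OK"]
    digest = [r for r in results if severity(r) == "OK"]
    return immediate, digest


def digest_body(results):
    """Render the held-back successes as one line per host."""
    return "\n".join("{}: OK".format(r.host) for r in results)
```

Treating skipped files as a warning rather than an error is a design choice; anyone who considers such a backup “good” could fold WARNING into the digest instead.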

I’ve got a GitHub repository set up where I’m starting to store my artifacts, but it’s very minimal at the moment. I’ll post back as I capture exit codes and initial code.

I’ve cobbled together some Python to launch the command-line version of Duplicacy and found this (the last line is Python reporting Duplicacy’s exit code):

```
12 files were not included due to access errors
The shadow copy has been successfully deleted
Command exit status/return code : 0
```

go.py is my testing code, and the above is the repo where I’ll start developing my wrapper.

Hey Lance,

You may want to take a look at my cross-platform “Go” program to run Duplicacy backups. I need docs (and will work on it if someone needs it next), but I’m using this for my stuff pretty successfully.

It was originally just going to be for Mac OS/X, but I decided in the end to write it in “Go”. Partly this lets me learn “Go”, and partly this gives me portability. I test on Windows but primarily run it on OS/X.

If you have questions, send me E-Mail or post an issue to the repository.

I just got an E-Mail framework going. It has log files, log file rotation, short summaries (printed on screen, will be body of E-Mail message). All of this was written to be completely portable. “Go” is a great language for that, as there is a rich library of packages (both in Go itself and elsewhere).

ooh- I will take a look at this too!

Looks like I could use a tiny bit of help to get started. I too am on OS/X.

Not sure how to begin to try out your code… can you give me a 3-step quick start? Thanks very much!!

I had the same need: send myself emails when no backups were received for 3 days, and again after 7 days.

I created a small PHP script that uses the Duplicacy CLI to check all the backups found in specific folders, uses the list command to find the latest snapshot available for each, and sends me an email when it is too old.

If anyone wants to use it, I published it here: https://gist.github.com/gboudreau/91b8866e3adb19965897ecd80a12525b

See instructions at the top of the script.

Example output:

```
[2018-05-17 20:33] Last backup from Guillaumes-MacBook-Pro-gb-gb was 0 hours (0 days) ago...
[2018-05-17 20:33] OK.
```

or when it fails:

```
[2018-05-17 20:45] Last backup from Guillaumes-MacBook-Pro-gb-gb was 1 hours (3 days) ago...
[2018-05-17 20:45] Oh noes! Backup from 'Guillaumes-MacBook-Pro-gb-gb' is missing for more than 3d!!
[2018-05-17 20:45] Sending email notification to you@gmail.com
```
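The “email after 3 days, and again after 7 days” rule can be sketched independently of the PHP script. The Python below is not taken from the gist; it is a minimal illustration that assumes the caller remembers the highest threshold it has already reported for each backup. The name `threshold_to_notify` is made up.

```python
THRESHOLDS_DAYS = (3, 7)  # notify once at 3 days without a backup, and again at 7


def threshold_to_notify(age_days, already_notified=0):
    """Return the highest threshold crossed that has not been reported yet, else None."""
    crossed = [t for t in THRESHOLDS_DAYS if age_days >= t]
    if not crossed:
        return None
    highest = max(crossed)
    return highest if highest > already_notified else None
```

Resetting `already_notified` to 0 whenever a fresh snapshot shows up would re-arm both thresholds.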

Comments / questions / Pull Requests are welcome.

Cheers,

- Guillaume

PS Tested on Linux; pretty sure it would work as-is on Mac. On Windows: meh!

Great! I have been trying to put together something like this too… my biggest problem is that my SFTP server is a QNAP, and QNAP doesn’t support any sort of command-line mail utility (sendmail etc.), which is REALLY irritating.

PS: I too am a CrashPlan refugee, trying to get the same features that I am used to from Duplicacy. I now have about 8 people backing up to my basement using it…
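Where no sendmail-style utility is available, one possible workaround is to talk to an SMTP server directly. This is a minimal Python sketch using only the standard library. It assumes Python 3 can be installed on the NAS and that an SMTP account supporting STARTTLS on port 587 is available. The host, addresses and credentials shown are placeholders.

```python
import smtplib
from email.message import EmailMessage


def build_alert(sender, recipient, subject, body):
    """Assemble a plain-text alert message."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_alert(msg, host, port=587, username=None, password=None):
    """Send the message through an SMTP server, upgrading the connection with STARTTLS."""
    with smtplib.SMTP(host, port, timeout=30) as smtp:
        smtp.starttls()
        if username:
            smtp.login(username, password)
        smtp.send_message(msg)


if __name__ == "__main__":
    # Placeholder values; replace with a real SMTP server and account before running.
    alert = build_alert("nas@example.com", "you@example.com",
                        "WARNING: backup is overdue", "No new snapshot found.")
    send_alert(alert, "smtp.example.com", username="nas@example.com", password="app-password")
```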
