Context: I have a ~7TB backup on an external 12TB drive. There are about 1.5 million files in the Duplicacy backup folder. I’ve scheduled a “check” after each backup to refresh the stats in the web GUI.

My problem is that this check runs for more than 2 hours every day.

I’ve searched the forum for similar issues and found two threads, but both of them seem to have stalled:

- The "check" command is so slow that it can never finish. Is there anything that can be done to improve the speed of "check"?

- Optimizations for large datasets

Based on the logs, it spends nearly an hour just checking that the files exist. Then it does “nothing” for almost another hour and a half, and finally it spits out the statistics.

Running check command from S:\duplicacy-web/repositories/localhost/all

Options: [-log check -storage WD-12TB -a -tabular]

2023-12-10 22:33:53.649 INFO STORAGE_SET Storage set to B:/Duplicacy

2023-12-10 22:33:53.686 INFO SNAPSHOT_CHECK Listing all chunks

2023-12-10 22:35:45.191 INFO SNAPSHOT_CHECK 2 snapshots and 846 revisions

2023-12-10 22:35:45.232 INFO SNAPSHOT_CHECK Total chunk size is 6779G in 1451881 chunks

2023-12-10 22:35:48.121 INFO SNAPSHOT_CHECK All chunks referenced by snapshot Shared at revision 1 exist

2023-12-10 22:35:50.451 INFO SNAPSHOT_CHECK All chunks referenced by snapshot Shared at revision 6 exist

2023-12-10 22:35:52.894 INFO SNAPSHOT_CHECK All chunks referenced by snapshot Shared at revision 8 exist

2023-12-10 22:35:55.701 INFO SNAPSHOT_CHECK All chunks referenced by snapshot Shared at revision 10 exist

[...]

2023-12-10 23:23:37.343 INFO SNAPSHOT_CHECK All chunks referenced by snapshot Private at revision 550 exist

2023-12-10 23:23:40.290 INFO SNAPSHOT_CHECK All chunks referenced by snapshot Private at revision 551 exist

2023-12-10 23:23:44.112 INFO SNAPSHOT_CHECK All chunks referenced by snapshot Private at revision 552 exist

2023-12-10 23:23:47.036 INFO SNAPSHOT_CHECK All chunks referenced by snapshot Private at revision 553 exist

2023-12-10 23:23:49.848 INFO SNAPSHOT_CHECK All chunks referenced by snapshot Private at revision 554 exist

2023-12-11 00:52:27.107 INFO SNAPSHOT_CHECK

snap | rev | | files | bytes | chunks | bytes | uniq | bytes | new | bytes |

[...]
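To put numbers on the phases, here is a quick Python calculation that uses only the timestamps from the log above. The variable names are my own labels, and the 846 comes from the `2 snapshots and 846 revisions` line:

```python
from datetime import datetime

# Timestamps copied verbatim from the check log above.
FMT = "%Y-%m-%d %H:%M:%S.%f"
listing_start = datetime.strptime("2023-12-10 22:33:53.686", FMT)    # "Listing all chunks"
revisions_start = datetime.strptime("2023-12-10 22:35:45.191", FMT)  # "2 snapshots and 846 revisions"
last_revision = datetime.strptime("2023-12-10 23:23:49.848", FMT)    # "Private at revision 554 exist"
table_printed = datetime.strptime("2023-12-11 00:52:27.107", FMT)    # start of the table output

phases = {
    "listing chunks": revisions_start - listing_start,
    "checking revisions": last_revision - revisions_start,
    "silent phase before the table": table_printed - last_revision,
}
for name, delta in phases.items():
    print(f"{name}: {delta}")

# Average time per revision over all 846 revisions reported in the log.
per_rev = phases["checking revisions"].total_seconds() / 846
print(f"average per revision: {per_rev:.2f} s")
```

That works out to about 1 min 52 s for listing the chunks, about 48 min for the per-revision checks (roughly 3.4 s per revision on average), and almost 1 h 29 min of silence before the table appears.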

It takes 2-4 seconds to check each revision. I don’t really understand why it is so slow when every revision shares 99% of its chunks (and therefore the same files that were already checked) with the previous revision. Why isn’t there simple caching logic to remember the chunks that have already been checked?

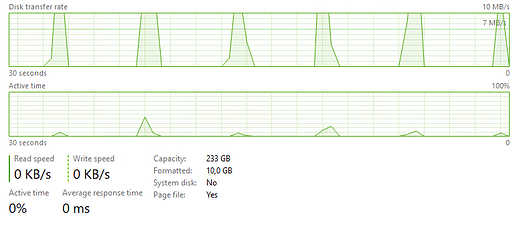

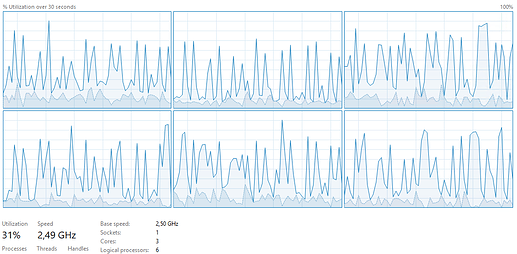
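To illustrate the kind of caching I have in mind, here is a minimal Python sketch. It is purely hypothetical: `check_revisions` and the `exists` callback are made-up names, not Duplicacy’s code or API, and it assumes the expensive part of the check is a per-chunk existence lookup, which may not be where the time actually goes. Each distinct chunk is checked once, and the result is reused for every later revision that references it:

```python
from typing import Callable, Dict, List


def check_revisions(
    revisions: Dict[int, List[str]],
    exists: Callable[[str], bool],
) -> Dict[int, List[str]]:
    """Return the missing chunk IDs for each revision (hypothetical sketch).

    Each distinct chunk ID is passed to `exists` at most once, no matter
    how many revisions reference it.
    """
    seen: Dict[str, bool] = {}  # chunk ID -> cached result of the expensive check
    report: Dict[int, List[str]] = {}
    for rev in sorted(revisions):
        missing = []
        for chunk_id in revisions[rev]:
            if chunk_id not in seen:
                seen[chunk_id] = exists(chunk_id)  # only for chunks not seen before
            if not seen[chunk_id]:
                missing.append(chunk_id)
        report[rev] = missing
    return report


if __name__ == "__main__":
    calls = []
    on_disk = {"a", "b", "c"}

    def fake_exists(chunk_id: str) -> bool:
        calls.append(chunk_id)  # record every "expensive" lookup
        return chunk_id in on_disk

    revs = {1: ["a", "b"], 2: ["a", "b", "c"], 3: ["a", "b", "c", "d"]}
    print(check_revisions(revs, fake_exists))  # {1: [], 2: [], 3: ['d']}
    print(len(calls))  # 4
```

With 99% of the chunks shared between consecutive revisions, the expensive lookups would be limited to the ~1.45 million distinct chunks instead of being repeated for every revision; the remaining per-revision work is in-memory dictionary lookups.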

Then it does something for well over an hour without leaving any trace in the log. What is it doing here? The files have already been scanned, and it knows their sizes and everything else about them. Does it really take more than an hour to format that data into a table?
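For comparison, here is a hypothetical Python sketch of how per-revision `uniq` and `new` chunk counts could be derived with one counting pass. I haven’t looked at Duplicacy’s source, so the column meanings are my assumptions: `uniq` as “referenced by no other revision” and `new` as “not referenced by any earlier revision”. The function name `revision_stats` is made up:

```python
from collections import Counter
from typing import Dict, Set


def revision_stats(revisions: Dict[int, Set[str]]) -> Dict[int, Dict[str, int]]:
    """Hypothetical per-revision chunk statistics.

    Assumed meanings (not taken from Duplicacy):
      uniq = chunks referenced by this revision and by no other revision
      new  = chunks not referenced by any earlier revision
    """
    # One pass over all revisions to count how many revisions reference each chunk.
    refcount: Counter = Counter()
    for chunks in revisions.values():
        refcount.update(chunks)

    stats: Dict[int, Dict[str, int]] = {}
    seen_before: Set[str] = set()  # chunks referenced by earlier revisions
    for rev in sorted(revisions):
        chunks = revisions[rev]
        stats[rev] = {
            "chunks": len(chunks),
            "uniq": sum(1 for c in chunks if refcount[c] == 1),
            "new": len(chunks - seen_before),
        }
        seen_before |= chunks
    return stats


if __name__ == "__main__":
    demo = {1: {"a", "b"}, 2: {"a", "b", "c"}, 3: {"a", "d"}}
    for rev, s in revision_stats(demo).items():
        print(rev, s)
```

Note that the work in this sketch scales with the total number of chunk references summed over all 846 revisions, not just with the 1.45 million distinct chunks.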

Is there anything I can do about this slowness? Taking 2+ hours on a local drive doesn’t seem right.
