@joe, right: the dot has a special meaning in a CSS selector (it starts a class selector), so #Duplicacy.WasabiChartSize won’t find the graph. I’ll add a check on the storage name so that such characters aren’t allowed.
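Here is a minimal shell sketch of such a check. It assumes, hypothetically, that storage names are limited to letters, digits, underscores and hyphens, and that they may not start with a digit or hyphen, because an ID selector such as `#1abc` is also invalid unless escaped. The function name `is_valid_storage_name` is made up for illustration and is not Duplicacy's actual code. The page could also work around the problem without restricting names. It could escape the dot, as in `#Duplicacy\.WasabiChartSize`, or look the element up with `document.getElementById`, which does not parse selectors at all.

```shell
# Hypothetical storage-name check (not Duplicacy's real implementation).
# Accepts only letters, digits, '_' and '-', and rejects a leading digit or hyphen,
# so the name can be used in a CSS id selector without escaping.
is_valid_storage_name() {
  case $1 in
    '' | [0-9-]* | *[![:alnum:]_-]*)
      return 1 ;;  # empty, bad first character, or a disallowed character such as '.'
    *)
      return 0 ;;
  esac
}

for name in Wasabi Duplicacy.Wasabi my-storage_1 1storage; do
  if is_valid_storage_name "$name"; then
    echo "$name: ok"
  else
    echo "$name: rejected"
  fi
done
```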

I would like to move my current license to a new computer running Duplicacy Web Edition. How do I find the hostname so I can transfer the license in my account?

EDIT

I found the solution in another thread:

On Linux, simply enter “hostname” in the console.

It would be handy if Duplicacy Web showed the hostname somewhere.

I came across something that is at least unexpected behavior, if not a bug. If I rename a schedule, all of the history for that schedule is lost in the “Activities” timeline in the dashboard.

When an access error occurs:

Running backup command from /root/.duplicacy-web/repositories/localhost/0 to back up test

Options: [-log backup -storage test -stats]

2019-01-23 01:14:57.360 INFO REPOSITORY_SET Repository set to test

2019-01-23 01:14:57.360 INFO STORAGE_SET Storage set to /storage

2019-01-23 01:14:57.370 INFO BACKUP_START No previous backup found

2019-01-23 01:14:57.370 INFO BACKUP_INDEXING Indexing /root/.duplicacy-web/repositories/localhost/0/test

2019-01-23 01:14:57.370 WARN LIST_FAILURE Failed to list subdirectory: open /root/.duplicacy-web/repositories/localhost/0/test: no such file or directory

2019-01-23 01:14:57.487 WARN SKIP_DIRECTORY Subdirectory cannot be listed

2019-01-23 01:14:57.491 INFO BACKUP_END Backup for /root/.duplicacy-web/repositories/localhost/0/test at revision 1 completed

2019-01-23 01:14:57.491 INFO BACKUP_STATS Files: 0 total, 0 bytes; 0 new, 0 bytes

2019-01-23 01:14:57.491 INFO BACKUP_STATS File chunks: 0 total, 0 bytes; 0 new, 0 bytes, 0 bytes uploaded

2019-01-23 01:14:57.491 INFO BACKUP_STATS Metadata chunks: 3 total, 8 bytes; 2 new, 6 bytes, 24 bytes uploaded

2019-01-23 01:14:57.491 INFO BACKUP_STATS All chunks: 3 total, 8 bytes; 2 new, 6 bytes, 24 bytes uploaded

2019-01-23 01:14:57.491 INFO BACKUP_STATS Total running time: 00:00:01

2019-01-23 01:14:57.491 WARN BACKUP_SKIPPED 1 directory was not included due to access errors

The UI marks the backup run as a success, which is wrong, because the backup failed.

I have to disagree here. This is in line with what the CLI does, and it is what I would also expect:

2019-01-23 01:14:57.491 INFO BACKUP_END Backup for /root/.duplicacy-web/repositories/localhost/0/test at revision 1 completed

As this line shows, the backup completed, which means this was a successful run.

It is true that the one directory (the only one to back up) could not be listed, so 0 files were backed up, but the backup itself was still a success because no errors occurred.

That’s the thing: the repository was selected and there were files in it, outside of .duplicacy-web. Yet Duplicacy failed to find something within its own .duplicacy-web folder and then failed to pick up those files. Deleting that backup and creating a new one worked, so I’m not sure what the issue was. Perhaps it is now too late, and there isn’t enough data to triage it.

In other words, a user selected files and pressed Backup, and the software said “All good” when in reality nothing was done. Had I not clicked the link to the log file, I would not have known.

I think any failure to pick up files should be fatal. The user selected that file, and if it is unreadable, something should be done about it, or at least a warning should be shown.

If these won’t be treated as errors, could the UI at least say that the run “completed with warnings” (or something similar), since Duplicacy logs these file I/O errors as warnings?
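Here is a minimal sketch of the three-way status being asked for. It assumes the log line layout shown above: date, time, level, code, message. The function name `backup_status` is hypothetical. This check only reads log text. A real implementation would likely also need the CLI's exit status.

```shell
# Hypothetical classifier for a Duplicacy CLI log file (sketch only).
# Assumes lines look like: 2019-01-23 01:14:57.491 WARN BACKUP_SKIPPED ...
backup_status() {
  log=$1
  if grep -q '^[0-9-]* [0-9:.]* ERROR ' "$log"; then
    echo "failed"
  elif grep -q '^[0-9-]* [0-9:.]* WARN ' "$log"; then
    echo "completed with warnings"   # e.g. LIST_FAILURE, SKIP_DIRECTORY, BACKUP_SKIPPED
  else
    echo "completed"
  fi
}

# Usage with three lines taken from the log above.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
2019-01-23 01:14:57.370 WARN LIST_FAILURE Failed to list subdirectory: open /root/.duplicacy-web/repositories/localhost/0/test: no such file or directory
2019-01-23 01:14:57.491 INFO BACKUP_END Backup for /root/.duplicacy-web/repositories/localhost/0/test at revision 1 completed
2019-01-23 01:14:57.491 WARN BACKUP_SKIPPED 1 directory was not included due to access errors
EOF
backup_status "$tmp"   # prints: completed with warnings
rm -f "$tmp"
```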

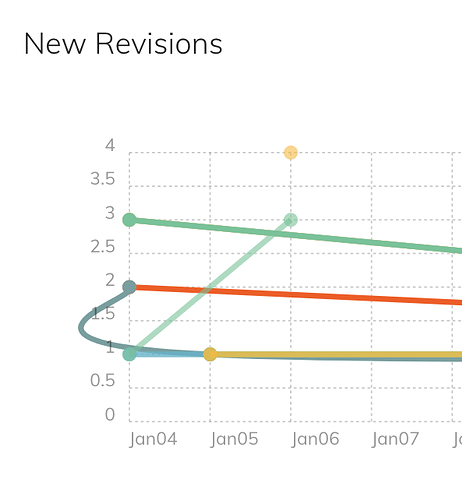

I don’t know if any more examples of wonky line smoothing are needed, but here’s another real-world one. Smoothed lines might look pretty for screenshots under certain scenarios, but they’re not accurate.

Hahah that’s so comical.

But yes, that particular chart needs bar graphs instead.

Not least because the first and most recent revision counts (e.g. at the start of the day) will be 0 or low, so a line, curvy or straight, just doesn’t make sense. They’re supposed to be independent values anyway.

LOL, can’t stop laughing.  Made my day!

Made my day!

Security issue: once I log in from one browser session, anybody can open another browser session without being prompted to log in again.

Also, there should be a logout button.

Inconsistency:

The executable is called duplicacy_web, but the config directory is called ~/.duplicacy-web.

On first launch, duplicacy_web seems to generate an identifying number and download a license for that machine:

Duplicacy Web Edition Beta 0.2.10 (5771BE)

Starting the web server at http://[::]:3875

2019/01/24 23:40:15 A new license has been downloaded for 093e2fd5198e

When running Duplicacy in a Docker container, this ID changes after the container is re-created, even though /etc/machine-id is preserved (manually restored).

Because a container has no persistent identity, a specific Duplicacy instance should not be identified by discardable container data.

Would it be possible to control how Duplicacy computes that identifying information, or to have an option to preserve it in the configuration directory once computed (in duplicacy.json, an environment variable, or any other way)?

Otherwise, every time a new container is created it will pull a new license, and I’m not sure what will happen when the user actually needs to activate the product.

No, that does not work. (I actually keep the entire ~/.duplicacy-web, minus caches and logs, on the host side.)

Because Duplicacy detects that the host changed, the licenses issued for the previous host become invalid and it downloads a new one.

I do preserve /etc/machine-id, but that is not enough. Perhaps Duplicacy uses the Ethernet MAC address as well. I could try preserving that too, but I don’t want to keep chasing it; it would be easier if we had a supported solution.

On Linux, Duplicacy finds the license ID in /var/lib/dbus/machine-id. You can symlink it to /etc/machine-id.

This does not seem to work.

If /etc/machine-id doesn’t exist, the software complains that it cannot find that file. So I’ve symlinked both /etc/machine-id and /var/lib/dbus/machine-id to the same file, located outside the container, which is populated once from /var/lib/dbus/machine-id.

And yet, every time, duplicacy_web downloads a new license:

Here is what I do in a new container:

ln -s /config/ ~/.duplicacy-web

...

echo Machine-ID=$(cat /var/lib/dbus/machine-id)

echo Licenses=$(cat /config/licenses.json)

...

duplicacy_web &

wait

and this is what I get:

First invocation, when licenses.json does not exist:

Machine-ID=94eb0440e21288f27c81ea6b5c4bbc1f

cat: can't open '/config/licenses.json': No such file or directory

Licenses=

Logging tail of the log from this moment on

Starting duplicacy

Log directory set to /logs

2019/01/26 02:09:45 A new license has been downloaded for 9cc80824b422

Duplicacy CLI 2.1.2

Second invocation:

Machine-ID=94eb0440e21288f27c81ea6b5c4bbc1f

Licenses={ "9cc80824b422": { "license": "7b....0a", "signature": "1b46287434cb0b0a97eec729d410d1e354fa384294cf1d8498dff4c26fe58f894eb004593bf3ce10787261fa46990e9240a56a90af6d312ee96659fc345f23ec1e58cee2ab67f0b45677226264d5674fac281f619ca8457320406b64437851ee15c128d9d9b38dede074cc9442d17a9f000945bc7bdbdaa743fb819aa2ec950c39e6d20de7146f541ef32bc2272a423f787f738c99265f1267820ce0fbb5d72bc87432ebf411ba025e108adec80b34709d2b9f9da65ae2c37d90f745937a4b489d1a4f3992a7bf0e723a8c92b3a9e6e651edd11572723df4204a6c711b3d3d5be79388a34337898f0fb31713ae13c40893fc9a11c4411b63aecd649805e08343" } }

Logging tail of the log from this moment on

Starting duplicacy

Log directory set to /logs

Duplicacy CLI 2.1.2

Duplicacy Web Edition Beta 0.2.10 (5771BE)

Starting the web server at http://[::]:3875

2019/01/26 02:09:59 A new license has been downloaded for 130ff08991ba

Note: the machine-id is the same, and yet it downloads a new license.

So, what else is being used to come up with the host id?

9cc80824b422 and 130ff08991ba are host names. When the host name changes, Duplicacy will download a new license (both the machine ID and the host name should match what is in the license).

The host name is read from /proc/sys/kernel/hostname, but I guess it should be the same as what the hostname command returns.
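Here is a quick diagnostic for this, assuming only the two inputs described above: the machine ID and the host name from /proc/sys/kernel/hostname. The thread mentions both /var/lib/dbus/machine-id and /etc/machine-id, and this sketch prints the first one it can read. The function name `license_identity` is hypothetical. Running it in the old and the new container shows which of the two values changed.

```shell
# Hypothetical diagnostic: print the values that, per this thread, must match the license.
license_identity() {
  mid="(not found)"
  # Both paths come up in this thread; print the first readable one.
  for f in /var/lib/dbus/machine-id /etc/machine-id; do
    if [ -r "$f" ]; then
      mid=$(cat "$f")
      break
    fi
  done
  echo "machine-id=$mid"

  # Host name as the kernel reports it; uname -n is a portable fallback.
  if [ -r /proc/sys/kernel/hostname ]; then
    echo "hostname=$(cat /proc/sys/kernel/hostname)"
  else
    echo "hostname=$(uname -n)"
  fi
}

license_identity
```

In the output above, the key in licenses.json (9cc80824b422) is the host name, so you can compare it directly with the printed `hostname=` value.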

Ah! That totally makes sense. Docker creates hostnames that look like IDs, which confused me.

So, this is what I’ve learned needs to be done to stabilize the license when changing the container (which is based on Alpine Linux):

- install dbus: `apk add dbus`
- link the machine ID: `ln -s /var/lib/dbus/machine-id /etc/machine-id`
- pass the `--hostname` argument when launching the container.

This results in the correct behavior. Docker seems to ensure that the machine-id is generated consistently on the same host, so there is no need to save and restore it.
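The linking step can be made safe to rerun on every container start. Here is a minimal sketch. It assumes dbus is already installed in the image (`apk add dbus`) so that /var/lib/dbus/machine-id exists. `link_machine_id` is a hypothetical helper name. The paths are passed as parameters so you can try the helper outside a container.

```shell
# Hypothetical entrypoint helper: make DST a symlink to SRC unless DST already exists.
# Typical call: link_machine_id /var/lib/dbus/machine-id /etc/machine-id
link_machine_id() {
  src=$1
  dst=$2
  if [ ! -r "$src" ]; then
    echo "link_machine_id: cannot read $src (is dbus installed?)" >&2
    return 1
  fi
  # Leave an existing file or symlink (even a dangling one) alone.
  if [ -e "$dst" ] || [ -L "$dst" ]; then
    return 0
  fi
  ln -s "$src" "$dst"
}
```

In an entrypoint script, this would run before `exec duplicacy_web`. Start the container with a fixed host name, for example `docker run --hostname <your-fixed-name> <other options> <image>`, where the name and image are placeholders.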

Thank you for guidance!

What does “Match the requested format” mean when choosing a Backup ID?

When backing up to Wasabi, it worked perfectly on the first try; I chose “Companyname” as the backup ID. When backing up to SFTP, the backup ID seems to be more sensitive. I keep getting:

Running backup command from /var/services/homes/admin/.duplicacy-web/repositories/localhost/1 to back up /volume1/Storage

Options: [-log backup -storage Synology_DS413j -stats]

2019-02-07 06:57:09.736 INFO REPOSITORY_SET Repository set to /volume1/Storage

2019-02-07 06:57:09.736 INFO STORAGE_SET Storage set to sftp://Backup@192.168.2.17/Backup

2019-02-07 06:57:11.341 ERROR SNAPSHOT_LIST Failed to list the revisions of the snapshot CompanyName: failed to send packet header: EOF

But when I choose a short one-character name for the Backup ID, like “a” or “c”, it works immediately. I’ve tried 20 different combinations, and that’s the only factor that makes it work every time. Here’s one I cancelled after a few seconds once I saw it was working:

Running backup command from /var/services/homes/admin/.duplicacy-web/repositories/localhost/14 to back up /volume1/Storage

Options: [-log backup -storage ds413j -stats]

2019-02-07 08:32:21.573 INFO REPOSITORY_SET Repository set to /volume1/Storage

2019-02-07 08:32:21.573 INFO STORAGE_SET Storage set to sftp://Backup@192.168.2.17/Backup

2019-02-07 08:32:23.170 INFO BACKUP_START No previous backup found

2019-02-07 08:32:23.170 INFO BACKUP_INDEXING Indexing /volume1/Storage

The Backup ID seems to matter a lot for SFTP, but not for Wasabi. Can we have some guidance on this?

Thanks!