This gives me nothing, probably because I changed the name of the executable file, so I tried

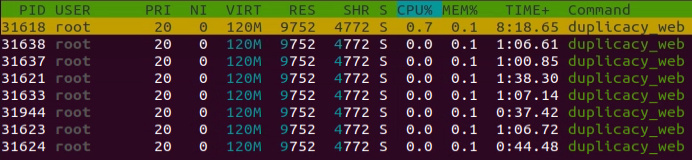

pidof ./duplicacy_web but it also gave me nothing. So I tried to get the PID via htop but which of these is it?
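`pgrep -f` matches against a process's full command line rather than only its name, so it can still find a renamed executable if some part of how it was started is known. Below is a minimal sketch. It assumes the renamed binary's command line still contains the string `duplicacy`, and `find_pids` is only an illustrative name. On the htop question: htop shows each thread of a process as its own row unless "Hide userland process threads" is enabled. Resource limits such as "Max open files" are per process and shared by all of its threads. So if those rows are threads of the same program, any of them should give the same `/proc/<id>/limits`.

```shell
#!/bin/sh
# find_pids PATTERN: print "PID full-command-line" for every process whose
# command line matches PATTERN (an extended regular expression).
#   -f  match against the whole command line, not only the process name
#   -a  print the whole command line next to each PID
find_pids() {
  pgrep -af "$1"
}

# Assumed pattern: adjust it to whatever the renamed executable is called.
find_pids duplicacy || echo "no matching process found" >&2
```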

Probably it doesn’t matter for this purpose, so I tried the first one:

```
$ cat /proc/31618/limits
Limit                     Soft Limit           Hard Limit           Units
Max cpu time              unlimited            unlimited            seconds
Max file size             unlimited            unlimited            bytes
Max data size             unlimited            unlimited            bytes
Max stack size            8388608              unlimited            bytes
Max core file size        0                    unlimited            bytes
Max resident set          unlimited            unlimited            bytes
Max processes             30166                30166                processes
Max open files            1024                 4096                 files
Max locked memory         16777216             16777216             bytes
Max address space         unlimited            unlimited            bytes
Max file locks            unlimited            unlimited            locks
Max pending signals       30166                30166                signals
Max msgqueue size         819200               819200               bytes
Max nice priority         0                    0
Max realtime priority     0                    0
Max realtime timeout      unlimited            unlimited            us
$
```

So does this mean that I'm not running into the system-wide limit on open files, but into the maximum of 4096 open files that duplicacy is allowed?

I'm somewhat puzzled why I'm running into any limit here. Whose fault is this? (I don't mean to blame anyone; I'm just trying to understand what's going on.) I'm inclined to believe that duplicacy is at least partly responsible.
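You can check whether the per-process limit is the one being hit by comparing how many descriptors the process holds with its limits. The kernel enforces the soft limit, which is 1024 in the output above. Once a process has that many descriptors open, further attempts to open files or sockets fail with EMFILE ("Too many open files"). The process itself may raise its soft limit up to the hard limit (4096 here). The system-wide limit is separate: `/proc/sys/fs/file-nr` shows the number of allocated file handles, the number of unused ones, and the system-wide maximum. Below is a minimal sketch of the per-process comparison. The helper `fd_usage` is hypothetical and not part of duplicacy.

```shell
#!/bin/sh
# fd_usage PID: print "OPEN SOFT HARD" for process PID, where OPEN is the
# number of file descriptors it currently holds (one entry per descriptor in
# /proc/PID/fd) and SOFT/HARD are its "Max open files" limits.
# Must run as the process owner or as root to read /proc/PID/fd.
fd_usage() {
  pid=$1
  open=$(ls "/proc/$pid/fd" | wc -l)
  # The limits line reads "Max open files <soft> <hard> files",
  # so the soft limit is field 4 and the hard limit is field 5.
  limits=$(awk '/^Max open files/ { print $4, $5 }' "/proc/$pid/limits")
  echo "$open $limits"
}

# Demo on the current shell; replace $$ with the PID of interest.
fd_usage "$$"
```

For the process inspected above, that would be `fd_usage 31618`. An OPEN value close to SOFT would point at the per-process limit rather than the system-wide one.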

Running `lsof` by itself just gives me a long list that I don't know what to do with. So I found a more sophisticated version here, and it gave me this:

```
$ sudo lsof | awk '{ print $2 " " $1; }' | sort -rn | uniq -c | sort -rn | head -20
lsof: WARNING: can't stat() fuse.gvfsd-fuse file system /run/user/1000/gvfs
      Output information may be incomplete.
  14144 22659 EmbyServe
   9455 21273 java
   7392 20256 nxnode.bi
   5220 17311 mozStorag
   4640 17311 FS\x20Bro
   4179 30448 java
   3791 2874 python2
   2862 2519 libvirtd
   2675 6466 nxnode.bi
   2232 20521 JS\x20Hel
   1992 20361 nxclient.
   1740 17311 DOM\x20Wo
   1740 17311 DNS\x20Re
   1160 17311 threaded-
   1160 17311 StreamT~s
   1160 17311 JS\x20Hel
   1160 17311 firefox
   1160 17311 AudioIPC
    804 19368 virt-mana
    744 20521 llvmpipe-
```
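The `lsof` counts above probably overstate how many descriptors each process holds. `lsof` also lists entries such as the working directory, the program text and memory-mapped libraries, none of which are descriptors. Here it also appears to list a process's open files once per thread, which would explain why PID 17311 shows up under several thread names (`mozStorag`, `DOM\x20Wo`, `firefox` and others). Counting the entries in `/proc/<pid>/fd` gives the number that is actually checked against "Max open files". Below is a minimal sketch. The helper `top_fd_users` is hypothetical; run it as root to include other users' processes.

```shell
#!/bin/sh
# top_fd_users [N]: print the N processes (default 20) with the most open file
# descriptors as "COUNT PID NAME". Each entry in /proc/PID/fd is one open
# descriptor of the process, so threads sharing that table are counted once.
# Processes we are not allowed to inspect show a count of 0.
top_fd_users() {
  for dir in /proc/[0-9]*; do
    count=$(ls "$dir/fd" 2>/dev/null | wc -l)
    # The process may exit while we scan; fall back to "?" for its name.
    name=$(cat "$dir/comm" 2>/dev/null) || name="?"
    echo "$count ${dir#/proc/} $name"
  done | sort -rn | head -n "${1:-20}"
}

top_fd_users 20
```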

How should I proceed?