That sounds like your file descriptor limit is too low. What does ulimit -a say?

This is not something I (consciously) changed so this should be the ubuntu default settings:

$ ulimit -a

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 30166

max locked memory (kbytes, -l) 16384

max memory size (kbytes, -m) unlimited

open files (-n) 1024

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 30166

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

I would suggest raising the hard limit for the process. Then you can double-check the limits with:

pidof ./duplicacy_web_linux_x64_1.1.0

cat /proc/\<pid you just got\>/limits

On my desktop system the default hard limit set by systemd is 524288, but you may prefer to use a lower number. Or the nofile limit can be set by golang so that users don’t have to do this, but I’m not familiar with golang.

Run lsof to find out which process opens the most files.

This gives me nothing, probably because I changed the name of the executable file, so I tried

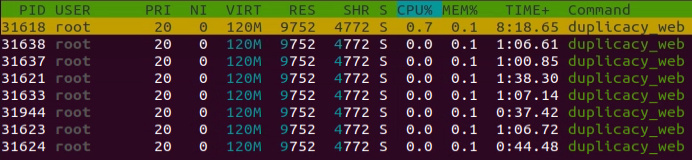

pidof ./duplicacy_web but it also gave me nothing. So I tried to get the PID via htop but which of these is it?

Probably it doesn’t matter for this purpose, so I tried the first one:

$ cat /proc/31618/limits

Limit Soft Limit Hard Limit Units

Max cpu time unlimited unlimited seconds

Max file size unlimited unlimited bytes

Max data size unlimited unlimited bytes

Max stack size 8388608 unlimited bytes

Max core file size 0 unlimited bytes

Max resident set unlimited unlimited bytes

Max processes 30166 30166 processes

Max open files 1024 4096 files

Max locked memory 16777216 16777216 bytes

Max address space unlimited unlimited bytes

Max file locks unlimited unlimited locks

Max pending signals 30166 30166 signals

Max msgqueue size 819200 819200 bytes

Max nice priority 0 0

Max realtime priority 0 0

Max realtime timeout unlimited unlimited us

$

So does this mean that I’m not running against the system limit of open files but against the max of 4096 open files that duplicacy is allowed?

I’m somewhat puzzled why I’m running against any limit here. Whose fault is this? (I’m not meaning to blame anyone but trying to understand what’s going on.) I’m inclined to believe that this is at least also a problem of duplicacy.

The command by itself just gives me a long list that I don’t know what to do with. So I found a more sophisticated version here and it gave me this:

sudo lsof | awk '{ print $2 " " $1; }' | sort -rn | uniq -c | sort -rn | head -20

lsof: WARNING: can't stat() fuse.gvfsd-fuse file system /run/user/1000/gvfs

Output information may be incomplete.

14144 22659 EmbyServe

9455 21273 java

7392 20256 nxnode.bi

5220 17311 mozStorag

4640 17311 FS\x20Bro

4179 30448 java

3791 2874 python2

2862 2519 libvirtd

2675 6466 nxnode.bi

2232 20521 JS\x20Hel

1992 20361 nxclient.

1740 17311 DOM\x20Wo

1740 17311 DNS\x20Re

1160 17311 threaded-

1160 17311 StreamT~s

1160 17311 JS\x20Hel

1160 17311 firefox

1160 17311 AudioIPC

804 19368 virt-mana

744 20521 llvmpipe-

How should I proceed?

If I had to guess, the limit is being hit by the duplicacy web server (low ulimit is a common issue with web servers). Try the following to temporarily increase the limits to see if it fixes the problem. Run the following as root, then restart duplicacy web:

ulimit -n 94000 # number is arbitrary

You can also try:

prlimit --pid pid # get the pid of the parent process

prlimit --pid pid --nofile=1024:94000 # set the pid of the parent processIt is the duplicacy CLI that complained about the error so you should find the pid of the CLI.

Run this pgrep command while a job is running (I think you were running a check job?):

pgrep duplicacy

You’ll see two pids. The second one is the pid of the CLI.

Then run lsof -p <pid> which will show files opened by the CLI.

So are you saying that I should not touch the ulimit for the webserver?

I only saw one. So I figured I should do it while my check-job is running and indeed, it gave me two this time. But it was the first one that was the pid of the CLI.

$ sudo lsof -p 20503

lsof: WARNING: can't stat() fuse.gvfsd-fuse file system /run/user/1000/gvfs

Output information may be incomplete.

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

duplicacy 20503 root cwd DIR 0,49 3 389369 /srv/NAS/duplicacy/duplicacy-web/repositories/localhost/all

duplicacy 20503 root rtd DIR 8,2 4096 2 /

duplicacy 20503 root txt REG 8,2 26806950 524332 /.duplicacy-web/bin/duplicacy_linux_x64_2.3.0

duplicacy 20503 root 0r CHR 1,3 0t0 6 /dev/null

duplicacy 20503 root 1w FIFO 0,12 0t0 40321032 pipe

duplicacy 20503 root 2w CHR 1,3 0t0 6 /dev/null

duplicacy 20503 root 3u sock 0,9 0t0 40320865 protocol: TCP

duplicacy 20503 root 4u a_inode 0,13 0 10602 [eventpoll]

duplicacy 20503 root 5u sock 0,9 0t0 40320870 protocol: TCP

duplicacy 20503 root 6u IPv4 40321058 0t0 TCP server:38002->api13.pcloud.com:https (ESTABLISHED)

$

So what does this tell me? Doesn’t look like the CLI has an awful lot of files open…

I saw in your other thread you are using systemd. The most straight forward option is just to put this in your service file:

[Service]

LimitNOFILE=94000 # change as you see fitI read your first post again and realized that it was just a warning, not a failure. That chunk couldn’t be saved in the local cache and the only side effect was that it would be downloaded again in case it is needed later.

In addition, it looks like a samba mount so it must be samba that opened too many files at a time when there were many chunks to be saved to the local cache. You’re less likely to see this warning again after most chunks are already in the local cache.

But, the temporary directory (repositories) should not be on a samba mount to begin with, because the cache is supposed to be local.

I don’t know linux so well, but I don’t think this is a samba mount. What makes you think that?

Here is how my server is configured:

- Ubuntu is running on a small SSD and

- the main storage (a ZFS pool) is mounted as /srv/NAS/ (the

NASdirectory is really not needed, it could just as well be directly in/srvbut I wasn’t sure whether/srvis used for anything else so I added another level, just to be sure). - /srv/NAS/ contains a number of directories such as

/srv/NAS/christophthat are also made available SMB shares, but/srv/NAS/duplicacy/is not. (And even if it were, would it really matter since from the perspective of duplicacy, it is still a local directory and I wouldn’t know how it would know about it also being a samba share, no?) The reason why it is there is because that’s where basically all my free disk space is.

So is any of this a problem for duplicacy?

Sorry I thought it was a samba or NFS drive from the name. So it is the CLI that opened too many files. I don’t know why it would open more than 1024 files. Increasing the ulimit should definitely help but because it is a harmless warning I would hold off unless it happens again.

It didn’t take so long until it happened again. Not exactly the same, but “too manu open files” is part of it:

2020-02-14 02:40:33.867 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot PC_C

2020-02-14 02:40:35.002 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/PC_C/1' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/PC_C/1: dial tcp: lookup webdav.pcloud.com on 127.0.0.53:53: dial udp 127.0.0.53:53: socket: too many open files)

2020-02-14 02:40:35.930 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/PC_C/1' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/PC_C/1: dial tcp: lookup webdav.pcloud.com on 127.0.0.53:53: dial udp 127.0.0.53:53: socket: too many open files)

2020-02-14 02:40:37.330 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/PC_C/1' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/PC_C/1: dial tcp: lookup webdav.pcloud.com on 127.0.0.53:53: dial udp 127.0.0.53:53: socket: too many open files)

2020-02-14 02:40:40.022 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/PC_C/1' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/PC_C/1: dial tcp: lookup webdav.pcloud.com on 127.0.0.53:53: dial udp 127.0.0.53:53: socket: too many open files)

2020-02-14 02:40:47.109 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/PC_C/1' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/PC_C/1: dial tcp: lookup webdav.pcloud.com on 127.0.0.53:53: dial udp 127.0.0.53:53: socket: too many open files)

2020-02-14 02:40:57.148 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/PC_C/1' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/PC_C/1: dial tcp: lookup webdav.pcloud.com on 127.0.0.53:53: dial udp 127.0.0.53:53: socket: too many open files)

2020-02-14 02:41:23.622 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/PC_C/1' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/PC_C/1: dial tcp: lookup webdav.pcloud.com on 127.0.0.53:53: dial udp 127.0.0.53:53: socket: too many open files)

2020-02-14 02:42:27.121 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/PC_C/1' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/PC_C/1: dial tcp: lookup webdav.pcloud.com on 127.0.0.53:53: dial udp 127.0.0.53:53: socket: too many open files)

2020-02-14 02:44:11.152 ERROR SNAPSHOT_INFO Failed to get the information on the snapshot PC_C at revision 1: Maximum backoff reached

Failed to get the information on the snapshot PC_C at revision 1: Maximum backoff reached

This is a strange combination of another error I have been seeing and the one reported in the OP.

Adding to all the strangeness in the other error (that the DNS lookup suddenly fails after the remote host has been contacted only minutes earlier), this one is even stranger the problem with the lookup suddenly is too many open files instead of no such host.

@gchen, could you explain how duplicacy constructs these error messages? I’m guessing that it is somehow passing on error messages that it gets from somewhere else?

This explained why the CLI opened so many files – each udp socket is a file descriptor too, and the Go resolver used too many sockets since the DNS resolver was down. So if you can figure out why the DNS resolver was down you won’t see this “too may files” error.

I love it when completely weird situations (see also this topic) suddenly start to make sense

Could this be the solution:

Unfortunately, this “too many open files” error keeps plaguing me even though I have set up a new server with a different operating system (Debian/ OpenMediaVault):

2020-07-20 04:23:08.749 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot NAS

2020-07-20 04:29:39.534 WARN DOWNLOAD_FILE_CACHE Failed to add the file snapshots/NAS/349 to the snapshot cache: open /.duplicacy-web/repositories/localhost/all/.duplicacy/cache/pcloud/snapshots/NAS/349.iorybnta.tmp: too many open files

2020-07-20 04:29:39.678 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/350' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/350: dial tcp: lookup webdav.pcloud.com on 1.1.1.1:53: dial udp 1.1.1.1:53: socket: too many open files)

2020-07-20 04:29:40.232 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/350' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/350: dial tcp: lookup webdav.pcloud.com on 1.1.1.1:53: dial udp 1.1.1.1:53: socket: too many open files)

2020-07-20 04:29:41.243 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/350' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/350: dial tcp: lookup webdav.pcloud.com on 1.1.1.1:53: dial udp 1.1.1.1:53: socket: too many open files)

2020-07-20 04:29:43.476 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/350' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/350: dial tcp: lookup webdav.pcloud.com on 1.1.1.1:53: dial udp 1.1.1.1:53: socket: too many open files)

2020-07-20 04:29:50.732 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/350' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/350: dial tcp: lookup webdav.pcloud.com on 1.1.1.1:53: dial udp 1.1.1.1:53: socket: too many open files)

2020-07-20 04:30:03.246 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/350' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/350: dial tcp: lookup webdav.pcloud.com on 1.1.1.1:53: dial udp 1.1.1.1:53: socket: too many open files)

2020-07-20 04:30:35.225 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/350' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/350: dial tcp: lookup webdav.pcloud.com on 1.1.1.1:53: dial udp 1.1.1.1:53: socket: too many open files)

2020-07-20 04:31:16.510 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/350' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/350: dial tcp: lookup webdav.pcloud.com on 1.1.1.1:53: dial udp 1.1.1.1:53: socket: too many open files)

2020-07-20 04:33:11.195 ERROR SNAPSHOT_INFO Failed to get the information on the snapshot NAS at revision 350: Maximum backoff reached

Failed to get the information on the snapshot NAS at revision 350: Maximum backoff reached

So I’m not sure anymore about that bug that I mentioned above. It is being discussed by the ubuntu people and although ubuntu is derived from Debian, I’m not sure I can assume that the same problem exists on my Debian server. And if it does, I’m not sure if I can just disable systemd-resolved as suggested here.

Any suggestions how I can finally get duplicacy web to work on my server?

2020-07-20 04:29:39.534 WARN DOWNLOAD_FILE_CACHE Failed to add the file snapshots/NAS/349 to the snapshot cache: open /.duplicacy-web/repositories/localhost/all/.duplicacy/cache/pcloud/snapshots/NAS/349.iorybnta.tmp: too many open files

This means it already ran out of file descriptors before the DNS resolver was trying to resolve webdav.pcloud.com. So it was not the DNS resolver’s fault – it just didn’t have a free file descriptor to open a socket.

What if you move the temporary directory from /.duplicacy-web/repositories/ to something under /tmp, like /tmp/duplicacy/repositories?

Indeed, after changing to /tmp/, I seem to be no longer getting “too many open files”. How can changing the path have this effect?

But the job nevertheless fails. Now like this:

2020-07-25 01:57:23.583 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot SERVER

2020-07-25 02:01:31.686 INFO WEBDAV_RETRY URL request 'MKCOL snapshots/SERVER' returned status code 401

2020-07-25 02:01:33.314 INFO WEBDAV_RETRY URL request 'PROPFIND snapshots/SERVER/204' returned status code 401

2020-07-25 02:02:12.717 INFO WEBDAV_RETRY URL request 'MKCOL snapshots/SERVER' returned status code 401

2020-07-25 02:02:14.662 INFO WEBDAV_RETRY URL request 'GET snapshots/SERVER/238' returned status code 401

2020-07-25 02:02:59.632 INFO WEBDAV_RETRY URL request 'MKCOL snapshots/SERVER' returned status code 401

2020-07-25 02:09:01.534 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot ALPHA_C

2020-07-25 02:15:02.943 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot NAS

2020-07-25 02:18:22.649 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/159' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/159: dial tcp: lookup webdav.pcloud.com on 208.67.222.2:53: no such host)

2020-07-25 02:18:23.196 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/159' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/159: dial tcp: lookup webdav.pcloud.com on 208.67.222.2:53: no such host)

2020-07-25 02:18:24.260 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/159' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/159: dial tcp: lookup webdav.pcloud.com on 208.67.222.2:53: no such host)

2020-07-25 02:18:27.188 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/159' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/159: dial tcp: lookup webdav.pcloud.com on 208.67.222.2:53: no such host)

2020-07-25 02:18:31.328 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/159' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/159: dial tcp: lookup webdav.pcloud.com on 208.67.222.2:53: no such host)

2020-07-25 02:18:43.355 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/159' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/159: dial tcp: lookup webdav.pcloud.com on 208.67.222.2:53: no such host)

2020-07-25 02:19:08.521 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/159' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/159: dial tcp: lookup webdav.pcloud.com on 208.67.222.2:53: no such host)

2020-07-25 02:19:47.403 TRACE WEBDAV_RETRY URL request 'PROPFIND snapshots/NAS/159' returned an error (Propfind https://webdav.pcloud.com/Backup/Duplicacy/snapshots/NAS/159: dial tcp: lookup webdav.pcloud.com on 208.67.222.2:53: no such host)

2020-07-25 02:21:10.069 ERROR SNAPSHOT_INFO Failed to get the information on the snapshot NAS at revision 159: Maximum backoff reached

Failed to get the information on the snapshot NAS at revision 159: Maximum backoff reached

What does this mean?

Your DNS resolver running on 208.67.222.2:53 is down.

Not sure what’s going on there. 208.67.222.2 is from OpenDNS and it is my secondary DNS, the primary one being 1.1.1.1 (cloudflare). No idea why it’s not using cloudflare. But I guess, I should get rid of the openDNS server.

Edit:

Plus, what I understand even less: why does duplicacy need to look up webdav.pcloud.com mid way through the process, after it has been downloading data from that same url for an hour or so? Doesn’t it remember the IP?