Based on the discussion in the other thread (The "check" command is so slow that it can never finish. Is there anything that can be done to improve the speed of "check"? - #10 by Droolio) where I was talking about check being slow, I am now trying to follow the suggestion of running prune to make check faster.

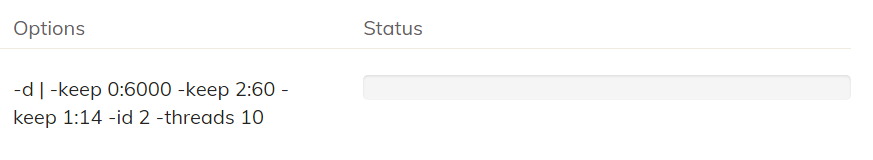

But prune seems even slower than check, and while check at least has a progress bar, prune just shows me this, no matter how long I wait:

At first I tried running it with -all, but now I’m trying to run it with -id 2 in the hope that that’s faster. And based on the log it is faster, but still takes infinitely long with no way for me to know how long it will actually take, as there’s no indicator for what the progress is even with -d in the log.

This is the relevant parts of the log:

Running prune command from C:\ProgramData/.duplicacy-web/repositories/localhost/all

Options: [-log -d prune -storage BackupGoogle -keep 0:6000 -keep 2:60 -keep 1:14 -id 2 -threads 10]

2022-10-05 22:09:49.363 INFO STORAGE_SET Storage set to gcd://Duplicacy

2022-10-05 22:09:49.386 DEBUG PASSWORD_ENV_VAR Reading the environment variable DUPLICACY_BACKUPGOOGLE_GCD_TOKEN

2022-10-05 22:09:51.604 DEBUG PASSWORD_ENV_VAR Reading the environment variable DUPLICACY_BACKUPGOOGLE_PASSWORD

2022-10-05 22:09:52.898 TRACE CONFIG_ITERATIONS Using 16384 iterations for key derivation

2022-10-05 22:09:53.149 DEBUG STORAGE_NESTING Chunk read levels: [1], write level: 1

2022-10-05 22:09:53.150 INFO CONFIG_INFO Compression level: 100

2022-10-05 22:09:53.150 INFO CONFIG_INFO Average chunk size: 4194304

2022-10-05 22:09:53.150 INFO CONFIG_INFO Maximum chunk size: 16777216

2022-10-05 22:09:53.150 INFO CONFIG_INFO Minimum chunk size: 1048576

2022-10-05 22:09:53.150 INFO CONFIG_INFO Chunk seed: c92876c834715f71fd9bf48a8f49efb1cd30a0a146239c00be9064d1d444135f

2022-10-05 22:09:53.150 TRACE CONFIG_INFO Hash key: 4c3d9cb7dfba828fa36c6cadb4d058a6d2b736b94eb0180bcc95259b066c5ac4

2022-10-05 22:09:53.150 TRACE CONFIG_INFO ID key: e929621736c330c716551f9b3f5c639fe93cdc05579927ef7485e409904ebccb

2022-10-05 22:09:53.150 TRACE CONFIG_INFO File chunks are encrypted

2022-10-05 22:09:53.150 TRACE CONFIG_INFO Metadata chunks are encrypted

2022-10-05 22:09:53.150 DEBUG DELETE_PARAMETERS id: 2, revisions: [], tags: [], retentions: [0:6000 2:60 1:14], exhaustive: false, exclusive: false, dryrun: false, deleteOnly: false, collectOnly: false

2022-10-05 22:09:53.151 INFO RETENTION_POLICY Keep no snapshots older than 6000 days

2022-10-05 22:09:53.151 INFO RETENTION_POLICY Keep 1 snapshot every 2 day(s) if older than 60 day(s)

2022-10-05 22:09:53.151 INFO RETENTION_POLICY Keep 1 snapshot every 1 day(s) if older than 14 day(s)

2022-10-05 22:09:53.151 TRACE SNAPSHOT_LIST_IDS Listing all snapshot ids

2022-10-05 22:09:53.575 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot 4

2022-10-05 22:13:08.432 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/4/1 from the snapshot cache

[a lot more of those, going up revisions]

2022-10-05 22:56:54.863 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/4/7915 from the snapshot cache

2022-10-05 22:56:54.863 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot 3

2022-10-05 22:58:50.616 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/3/1 from the snapshot cache

[a lot more of those, going up revisions]

2022-10-06 01:05:32.682 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/1/12594 from the snapshot cache

2022-10-06 01:05:34.290 DEBUG DOWNLOAD_FILE Downloaded file snapshots/1/12595

2022-10-06 01:05:34.292 DEBUG SNAPSHOT_DELETE Snapshot 2 at revision 3 to be deleted - older than 60 days, less than 2 days from previous

[a lot more of those, going up revisions]

2022-10-06 01:05:34.343 DEBUG SNAPSHOT_DELETE Snapshot 2 at revision 8639 to be deleted - older than 14 days, less than 1 days from previous

2022-10-06 01:05:34.343 INFO SNAPSHOT_DELETE Deleting snapshot 2 at revision 3

2022-10-06 01:05:34.359 DEBUG CHUNK_CACHE Chunk 5385423b401884c644ec49823732d168fc5e373b1b3b4ff8509f5a6e72661a86 has been loaded from the snapshot cache

2022-10-06 01:05:34.528 INFO SNAPSHOT_DELETE Deleting snapshot 2 at revision 4

[a lot more of those, going up revisions]

2022-10-06 01:29:49.252 DEBUG DOWNLOAD_FETCH Fetching chunk 39367b61cf9614eed24935da307fa6e29298f4f929d41830b3d97a49d4de2177

2022-10-06 01:29:49.254 DEBUG CHUNK_CACHE Chunk 39367b61cf9614eed24935da307fa6e29298f4f929d41830b3d97a49d4de2177 has been loaded from the snapshot cache

2022-10-06 01:29:49.467 INFO SNAPSHOT_DELETE Deleting snapshot 2 at revision 8639

2022-10-06 01:29:49.467 DEBUG DOWNLOAD_FETCH Fetching chunk 59999e7fd7629d3142415dfe1ae8b395f4f039898b2558b26ba5aafc3cdc5d40

2022-10-06 01:29:49.473 DEBUG CHUNK_CACHE Chunk 59999e7fd7629d3142415dfe1ae8b395f4f039898b2558b26ba5aafc3cdc5d40 has been loaded from the snapshot cache

2022-10-06 01:29:49.474 DEBUG DOWNLOAD_FETCH Fetching chunk 39367b61cf9614eed24935da307fa6e29298f4f929d41830b3d97a49d4de2177

2022-10-06 01:29:49.476 DEBUG CHUNK_CACHE Chunk 39367b61cf9614eed24935da307fa6e29298f4f929d41830b3d97a49d4de2177 has been loaded from the snapshot cache

2022-10-06 01:29:49.637 DEBUG DOWNLOAD_FETCH Fetching chunk cd26258898b4ccaad4e619c14dfb3bcacfedc3c1589bae9737bb0764d523ee4b

2022-10-06 01:29:49.651 DEBUG CHUNK_CACHE Chunk cd26258898b4ccaad4e619c14dfb3bcacfedc3c1589bae9737bb0764d523ee4b has been loaded from the snapshot cache

2022-10-06 01:29:49.652 DEBUG DOWNLOAD_FETCH Fetching chunk 21b7e146caa53407e977513da485c429b953dbcc5d0bbe478584db232fa33292

2022-10-06 01:29:49.683 DEBUG CHUNK_CACHE Chunk 21b7e146caa53407e977513da485c429b953dbcc5d0bbe478584db232fa33292 has been loaded from the snapshot cache

2022-10-06 01:29:49.685 DEBUG DOWNLOAD_FETCH Fetching chunk 7e00420080dd8fc76f0d8c0bbf29d922996dc6c97698e9efe71e3bca13cc3d6b

2022-10-06 01:29:49.691 DEBUG CHUNK_CACHE Chunk 7e00420080dd8fc76f0d8c0bbf29d922996dc6c97698e9efe71e3bca13cc3d6b has been loaded from the snapshot cache

2022-10-06 01:29:49.703 DEBUG DOWNLOAD_FETCH Fetching chunk dbfd1e444503131daf343647fe6d3514febbbccbee2728de404501628f68c1ba

2022-10-06 01:29:49.710 DEBUG CHUNK_CACHE Chunk dbfd1e444503131daf343647fe6d3514febbbccbee2728de404501628f68c1ba has been loaded from the snapshot cache

[and here now begins the real problem in speed, this goes on forever]

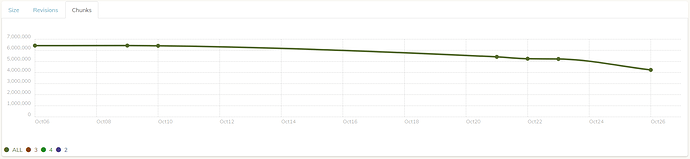

So at the beginning, there is a reasonably fine progress indicator in the log as it always counts up revisions, does something at the revision, like fetching a chunk, and then repeats the same for the next revision, etc, until it reached the highest number revision. But after 3.5 hours, it’s finished with that and no longer counts up revisions, and instead just infinitely repeats Fetching chunk and chunk has been loaded from the snapshot cache.

What is it actually doing there? Looking at the task manager at what Duplicacy is doing, I see that it’s using around ~6% CPU usage which equals ~2 threads on my 3950X, and somewhere between 0.1 MB/s and 4 MB/s in disk usage, and 0 MB/s network usage. So it seems whatever it’s doing here is fully local, not involving downloading or uploading anything on the network, just looking at local files. But why is it so extremely slow then? The cache folder is on my NVMe SSD that can do around 7000 MB/s, so I don’t understand why duplicacy is working on this at a snails pace.

)…

)…