Interesting, thanks!

> Yes, if this is acceptable to you, there is no reason not to continue using it, with the understanding that nothing unlimited lasts forever: eventually Google may figure out how to fix quotas and close this loophole. When (and if) this will happen is anybody's guess, but while it lasts I don't see a reason not to use it, provided the drawbacks are acceptable.

I'm not sure why you call it a “loophole”. I am using the “Google Workspace Enterprise Standard” plan, which officially gives you unlimited Google Drive space for $20/user/month, so it's not a “loophole”. I'm sure Google wouldn't offer it if they didn't make a profit from it on average. To be fair, though, the option to select that plan is quite hidden in their UI.

> - your backup manages to finish fast enough to keep up with new data being generated

Backup is plenty fast. I have “backup” scheduled hourly, and the backup job for my 2 TB C: drive, for example, takes about 10 minutes in total when it doesn't find any changed data, so I can comfortably run it every hour. When it does find changed data, I see it uploading at nearly 50 Mbit/s, so it's limited by my upload speed and nothing else.
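To put the “keep up with new data” question into numbers, here is a rough back-of-the-envelope sketch of how much data a link sustaining about 50 Mbit/s can move per hour and per day. It assumes decimal units (1 GB = 10^9 bytes) and ignores protocol, chunking, and retry overhead, so real throughput will be somewhat lower; the function name is just for illustration.

```python
# Rough upload-capacity estimate for a link sustaining ~50 Mbit/s.
# Assumes decimal units (1 GB = 10**9 bytes) and no protocol/chunking
# overhead, so actual figures will be somewhat lower.

def upload_capacity_gb(mbit_per_s: float, seconds: float) -> float:
    """Return how many gigabytes (10**9 bytes) fit through the link."""
    bytes_per_s = mbit_per_s * 1_000_000 / 8  # bits -> bytes
    return bytes_per_s * seconds / 1_000_000_000

per_hour = upload_capacity_gb(50, 3600)
per_day = upload_capacity_gb(50, 24 * 3600)
print(f"~{per_hour:.1f} GB/hour, ~{per_day:.0f} GB/day")
```

This prints `~22.5 GB/hour, ~540 GB/day`. As long as the amount of new or changed data per hour stays well below that figure, hourly backups should keep up.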

> - Check does not need to be fast: it can run in the background, launched from, say, a cloud instance, if you don't want to keep your PC running all the time

How would I do that? How can I run “check” from a “cloud instance”? Doesn't it need access to the local files?

> IIRC this is what provided the best performance and avoided “backing off” messages in the log (you can add -d to the global flags to get more verbose logging and see what's going on there).

Interesting, I'll certainly add -d then. So as long as I don't see any “backing off” messages, I can increase the thread count for more speed? And does the -threads option actually have any effect on “check”?

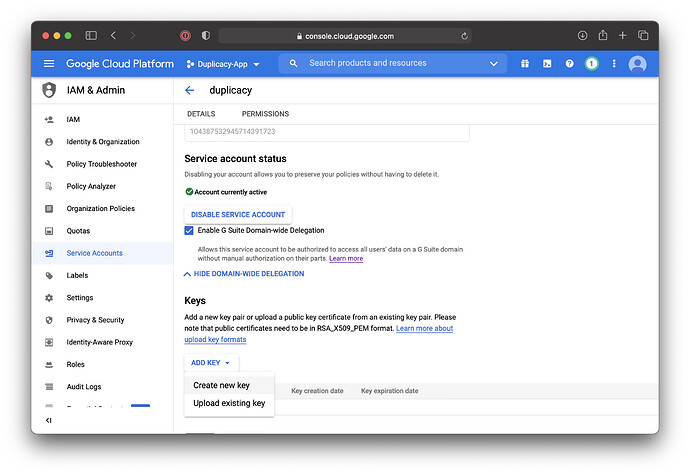

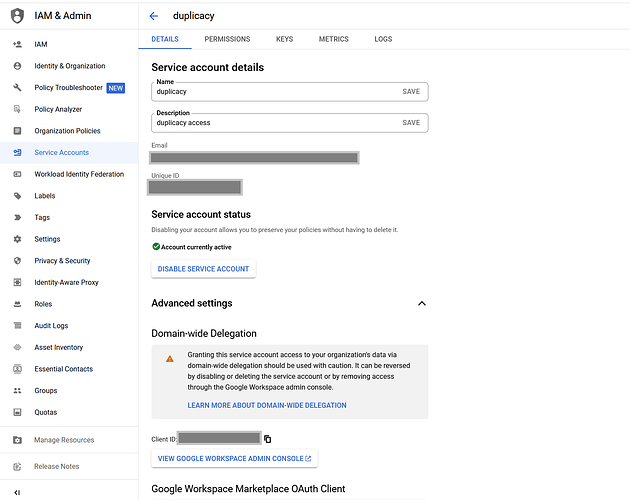
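The heuristic asked about here (raise the thread count while no “backing off” messages appear) can be checked mechanically once a run's verbose `-d` output has been saved to a file. The sketch below simply counts matching lines. The search pattern is an assumption taken from the wording in the quoted advice; the exact text Duplicacy logs for retries may differ, so inspect a real log and adjust the pattern. It says nothing about whether -threads affects “check”.

```python
import re
import sys
from pathlib import Path

# Assumed pattern, based on the "backing off" wording quoted above;
# the actual log text may differ, so adjust after inspecting a real log.
BACKOFF_PATTERN = re.compile(r"backing off", re.IGNORECASE)

def count_backoff_lines(lines) -> int:
    """Count log lines that look like backoff/retry messages."""
    return sum(1 for line in lines if BACKOFF_PATTERN.search(line))

def main(log_path: str) -> None:
    # Read the saved log; replace undecodable bytes rather than failing.
    text = Path(log_path).read_text(encoding="utf-8", errors="replace")
    hits = count_backoff_lines(text.splitlines())
    if hits == 0:
        print("No backoff messages found; a higher thread count may be worth trying.")
    else:
        print(f"{hits} backoff message(s) found; consider keeping or lowering the thread count.")

if __name__ == "__main__":
    if len(sys.argv) == 2:
        main(sys.argv[1])
    else:
        print("usage: python count_backoff.py LOGFILE")
```

Usage would be something like `python count_backoff.py backup.log`, where the script name and log file name are hypothetical.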

> If you have created a token from duplicacy.com/gcd_start, then you are using the shared project. Open the token file in a text editor and see whether duplicacy.com/gcd_refresh is mentioned anywhere.

Is that token file stored in a Duplicacy folder? If so, I can't find it. Where would it be?
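The manual check suggested in the quote (open the token file and look for duplicacy.com/gcd_refresh) amounts to a plain substring search, sketched below. Where the token file lives is not covered here, so the path is simply passed on the command line; the script name is hypothetical, and the check is no smarter than searching the file in a text editor.

```python
import sys
from pathlib import Path

# The marker the quoted advice says to look for in the token file.
SHARED_PROJECT_MARKER = "duplicacy.com/gcd_refresh"

def uses_shared_project(token_text: str) -> bool:
    """True if the token text mentions the shared-project refresh URL."""
    return SHARED_PROJECT_MARKER in token_text

def check_token_file(path: str) -> None:
    text = Path(path).read_text(encoding="utf-8", errors="replace")
    if uses_shared_project(text):
        print(f"{path}: mentions {SHARED_PROJECT_MARKER} (shared project)")
    else:
        print(f"{path}: no mention of {SHARED_PROJECT_MARKER}")

if __name__ == "__main__":
    # Check every path given on the command line.
    for token_path in sys.argv[1:]:
        check_token_file(token_path)
```

For example: `python check_token.py path/to/token.json`, with whatever path the token was actually saved under.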

> But in all fairness, if it works for you as is, I would just continue using it. If you want to, schedule check in a separate job so it does not block the ongoing backup. Duplicacy is fully concurrent, so this approach should work fine.

Yes, I have always been running all my backups and checks in parallel.