No, they never use the word “unlimited”. They specifically say “As much storage as you need*”, with an asterisk noting that it’s 5TB of pooled storage per user, and that enterprise customers can request more on an as-needed basis. The loophole is that those quotas have never been enforced, so de facto, even on the $12/month Business Standard plan, you can upload an unlimited amount of data. When they will close this bug, nobody knows but them.

In other words, you are not paying $20 for unlimited storage; you are paying for enterprise SaaS features, endpoint management, data vaults, etc., that you may or may not use, and along with that you get some amount of storage. Storage is not the main product; it’s incidental to all the other features and is pretty much given away for free. You happen to get unlimited storage as long as Google continues to neglect enforcing quotas, for whatever reasons they have for doing so.

I don’t work for Google and don’t know the details of why they are not enforcing the published quotas; regardless, this is a very weak argument: plenty of companies that offered unlimited data on various services have ceased to do so. Amazon Drive comes to mind as one of the recent departures.

It’s not really hidden; it’s listed along with the rest of the plans in the dashboard.

Check only verifies that the chunks referenced by the snapshot files exist in the storage. If you add the -chunks argument, it will also download the chunks and verify their integrity. You can set up duplicacy on a free Oracle Cloud instance and have it run check periodically. I would not do that: I’m of the opinion that storage must be trusted, and it’s not the job of a backup program to worry about data integrity. But it’s a possibility.
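If you do want to script the periodic check, here is a minimal Python sketch of the two variants described above. It assumes the `duplicacy` CLI binary is on the PATH and that it is run from a directory that has already been initialized as a repository; the helper names `build_check_command` and `run_check` are made up for illustration, and only the `check` command and its `-chunks` argument come from duplicacy itself.

```python
import subprocess
from typing import List


def build_check_command(verify_chunks: bool = False) -> List[str]:
    """Build the argument list for a `duplicacy check` run.

    Without -chunks, check only confirms that referenced chunks exist.
    With -chunks, it also downloads the chunks and verifies them.
    """
    cmd = ["duplicacy", "check"]  # assumes the binary is named `duplicacy` and is on PATH
    if verify_chunks:
        cmd.append("-chunks")
    return cmd


def run_check(repository_dir: str, verify_chunks: bool = False) -> int:
    """Run the check inside an initialized repository and return the exit code."""
    result = subprocess.run(build_check_command(verify_chunks), cwd=repository_dir)
    return result.returncode
```

You could call `run_check(...)` from cron or any other scheduler on the cloud instance. Keep in mind that the `-chunks` variant downloads every chunk, so it will count against whatever egress or API limits the storage has.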

Yes, but do you need more speed? If anything, you’d want to slow down the backup to avoid surges in usage. As long as each day’s new data eventually gets uploaded, speeding up the backup buys you nothing. In fact, I was running duplicacy under CPULimit-er, because I did not want it to run fast.

Look in the C:\ProgramData/.duplicacy-web/repositories/localhost/0/.duplicacy/preferences file. It will refer to the token file.
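If you’d rather not eyeball the file, a small Python sketch like the one below can pull the token reference out of it. This rests on assumptions you should verify against your own file: that preferences is a JSON array with one object per storage, that each object has a `name` and a `keys` dictionary, and that the token path sits under a key whose name ends in `token` (for example `gcd_token`). The function name `find_token_paths` is made up for illustration.

```python
import json
from typing import Dict, List


def find_token_paths(preferences_text: str) -> Dict[str, List[str]]:
    """Map each storage name to the values stored under keys ending in 'token'.

    Assumes the preferences file is a JSON array of storage objects, each
    with an optional "keys" dictionary. Check this against your actual file.
    """
    entries = json.loads(preferences_text)
    found: Dict[str, List[str]] = {}
    for entry in entries:
        name = entry.get("name", "<unnamed>")
        keys = entry.get("keys") or {}  # "keys" may be missing or null
        found[name] = [value for key, value in keys.items() if key.endswith("token")]
    return found


# Hypothetical sample content, not copied from a real preferences file.
sample = '[{"name": "default", "keys": {"gcd_token": "C:/tokens/gcd-token.json"}}]'
token_paths = find_token_paths(sample)
```

To use it on the real file, read the file’s contents into a string and pass that to `find_token_paths` instead of `sample`.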

Awesome. That’s the backoff thing I was referring to.

I don’t have an answer to that… I’ve been using it with 1 thread. But I’d expect there to be some per-user limits on the number of concurrent connections. The downside is that at some point managing a bunch of threads will be more overhead than benefit. But you can play with it and see how it works.

Yes, duplicacy does not care how it accesses files as long as it does.

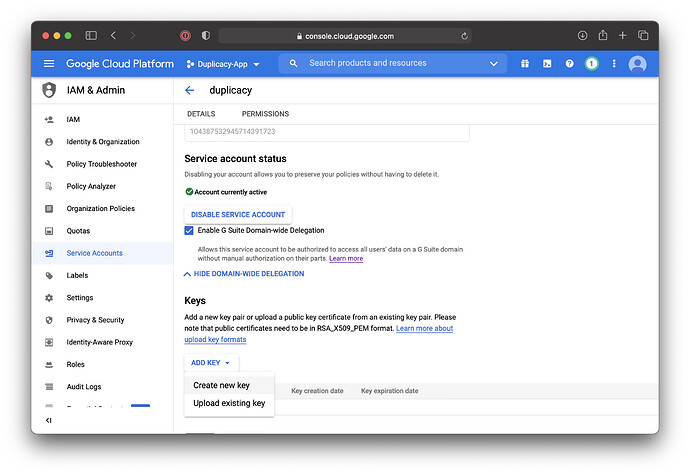

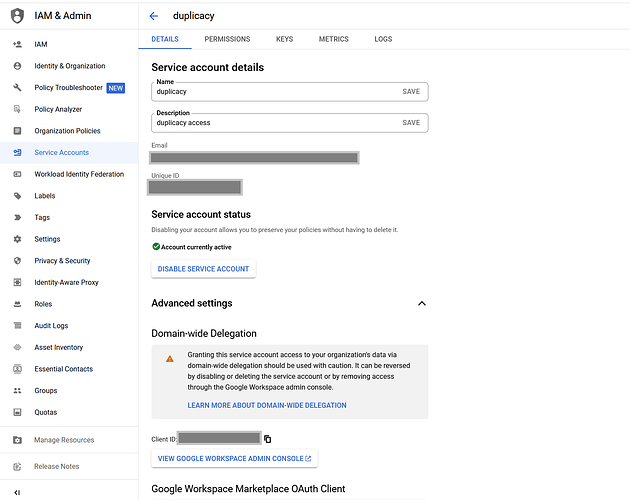

Duplicacy saves a path to a token file (even though it would have been more logical to save the token content). You can just replace that file with something else. Or just delete the storage and then add a new one, this time using your new token. If you give the storage the same name, you won’t need to redo the schedules; they will continue working. That will be easier than trying to pull the rug out from under it. Especially since you use the WebGUI: the actual path to the token file is encrypted in duplicacy_web.json, and the .duplicacy/preferences files are generated from that. So pretty much your only way is to delete the storage from duplicacy_web and then add it back with the same name.