This clause (which reads “with more storage available at Google’s discretion upon reasonable request to Google”) is there to prevent some dude with 15PB of data from abusing the service. And what exactly is a reasonable request? “More data, please!” “Here you go!”?

Anyway, none of this matters. Today there is no cap. Once they start enforcing caps (they haven’t done it on 1TB G Suite accounts; they dropped the price instead), then we can look for alternatives.

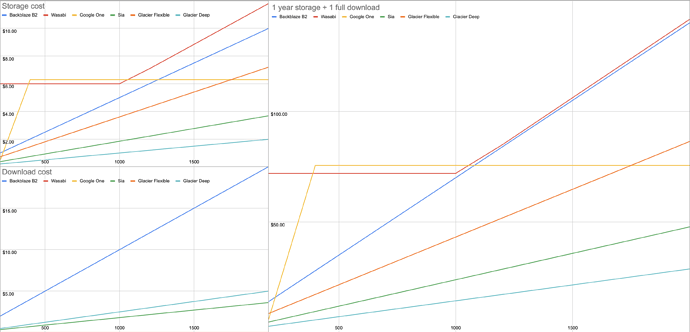

This is indeed the storage cost only. There are also traffic charges and API costs. It gets very, very expensive even just to back up, unless you optimize for Glacier specifically.
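To make the point about the extra charges concrete, here is a rough sketch of how a monthly bill adds up from the three components. The `Rates` class, the `monthly_cost` function, and every number below are made up for illustration; they are not real quotes from Glacier or any other provider, so plug in the current price list yourself.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    """Per-unit prices supplied by the caller (hypothetical, not real quotes)."""
    storage_per_gb_month: float  # price to keep 1 GB stored for one month
    egress_per_gb: float         # price to download 1 GB (restore traffic)
    per_1000_requests: float     # price per 1000 API calls


def monthly_cost(rates, stored_gb, restored_gb, requests):
    """Return (storage, traffic, api, total) for one month."""
    storage = stored_gb * rates.storage_per_gb_month
    traffic = restored_gb * rates.egress_per_gb
    api = requests / 1000 * rates.per_1000_requests
    return storage, traffic, api, storage + traffic + api


# Placeholder rates, chosen only to make the arithmetic easy to follow.
example_rates = Rates(storage_per_gb_month=0.01,
                      egress_per_gb=0.10,
                      per_1000_requests=0.05)

# 2 TB stored, one full restore, one million API calls in the month.
storage, traffic, api, total = monthly_cost(
    example_rates, stored_gb=2000, restored_gb=2000, requests=1_000_000)

print(f"storage={storage:.2f} traffic={traffic:.2f} api={api:.2f} total={total:.2f}")
```

With these placeholder numbers, the one restore and the API calls cost far more than the storage itself, which is why looking at the storage price alone is misleading.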

And a backup that you don’t plan to restore is called an archive. I’m sure there are archival tools that already include all those optimizations, but they are not backup tools.

Archival storage is so cheap precisely because it’s unusable for anything else. Backup tools must be extremely simple, minimal, and easy to understand to be trusted. Building complex contraptions just to use the wrong service for the job would be highly counterproductive, and would instantly negate any perceived cost savings at the first restore.

Maybe Acrosync can come up with archiving tools later. But that’s an entirely different industry.

Anyway, this is all to say that in my opinion:

- Archival storage should not be supported.

- Google is the best solution today for people with 2+TB of data; there is no reason not to use it.

- The very best approach would be to pay for actual commercial cloud storage: Google Cloud Storage, B2, S3, Azure, etc. It will be more expensive, but that’s what it costs. Storing data is expensive. It’s still cheaper than an on-premises NAS. Backup of your data is not a place for compromises.