Thanks very much for the new web-based GUI. I’ve been trying it out on two computers, mostly very successfully, and it is a much nicer experience than the previous GUI. I just want to report a few issues: one seems significant, and the others are minor. All testing has been on Win10 in Firefox.

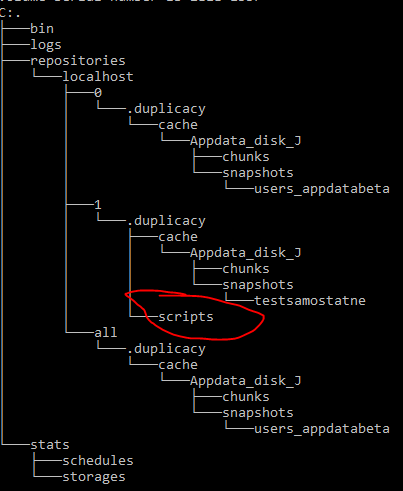

First, the significant bug. I have a directory, D:/user, that has 5 subdirectories (a/, b/, c/, d/, and e/) that I want to back up on different schedules. So I set up three backup jobs (numbered 0, 1, and 2 below, in this order):

- Backup 0: D:/user/ with filters -c/ -d/ -e/

- Backup 1: D:/user/c/ with filter -*.lrdata/

- Backup 2: D:/user/ with filters -a/ -b/ -c/

When I ran backup 2, it used the filters specified for backup 0 rather than its own, and when I ran backup 1 it seemed to ignore its filter. Looking at the files in ~/.duplicacy-web/, I see that filters/localhost/0, filters/localhost/1, and filters/localhost/2 are all different and correct. However, the files repositories/localhost/*/.duplicacy/filters are all identical and contain the entries for backup 0. The result seems to be that the GUI shows the correct set of filters for each backup, but the CLI is called with the wrong filters for backups 1 and 2. I can obviously fix this locally, but it seems worth fixing properly. On the other PC, I used the same filters for backups 0 and 1 and no filters for a third backup, and there were no problems.
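In case it helps anyone check whether they are affected, here is a rough Python sketch that compares the two sets of files mentioned above. It assumes that each repositories/localhost/N/.duplicacy/filters file is supposed to be an exact copy of filters/localhost/N; that is only my guess from looking at the directory, not something I know about how the GUI works. The function name `find_filter_mismatches` is made up for this example.

```python
from pathlib import Path


def find_filter_mismatches(root: Path, host: str = "localhost") -> list[str]:
    """Return backup indices whose CLI filters file differs from the GUI's copy.

    Assumption: root/filters/<host>/<N> and
    root/repositories/<host>/<N>/.duplicacy/filters should have identical text.
    """
    mismatched = []
    gui_dir = root / "filters" / host
    if not gui_dir.is_dir():
        return mismatched  # nothing to compare on this machine

    for gui_file in sorted(gui_dir.iterdir()):
        if not gui_file.is_file():
            continue
        cli_file = root / "repositories" / host / gui_file.name / ".duplicacy" / "filters"
        gui_text = gui_file.read_text(encoding="utf-8")
        # A missing CLI-side file is also reported as a mismatch.
        cli_text = cli_file.read_text(encoding="utf-8") if cli_file.is_file() else None
        if cli_text != gui_text:
            mismatched.append(gui_file.name)
    return mismatched


if __name__ == "__main__":
    root = Path.home() / ".duplicacy-web"
    for index in find_filter_mismatches(root):
        print(f"backup {index}: repositories copy differs from filters/{index}")
```

On my machine I would expect this to flag backups 1 and 2, since their repositories copies contain the entries for backup 0.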

Setting the starting time for a schedule is a little quirky. I couldn’t use the arrows and had to type in a time in the exact format used. For example, 4:00pm (no leading zero) didn’t work, and 16:00 and 04:00 pm also failed. Maybe an off-the-shelf JavaScript time chooser would help. Also, once I realized that the starting time sets the first time each day that the job will run, I thought it could also be useful to have an ending time, so that you could run a backup regularly only during work hours, for example.
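To illustrate the kind of leniency I have in mind, here is a small Python sketch of a parser that accepts all three of the inputs that failed for me. It is not how the GUI parses times (I don't know what it does internally); `parse_start_time` and `in_window` are hypothetical names, and the end-time check simply assumes a window that does not cross midnight.

```python
import re
from datetime import time

# Hours, minutes, and an optional am/pm suffix, with flexible whitespace.
_TIME_RE = re.compile(r"^\s*(\d{1,2}):(\d{2})\s*([ap]m)?\s*$", re.IGNORECASE)


def parse_start_time(text: str) -> time:
    """Parse inputs such as '4:00pm', '04:00 pm', or '16:00' into a time."""
    match = _TIME_RE.match(text)
    if match is None:
        raise ValueError(f"unrecognized time: {text!r}")

    hour, minute = int(match.group(1)), int(match.group(2))
    suffix = (match.group(3) or "").lower()
    if minute > 59:
        raise ValueError(f"minute out of range: {text!r}")

    if suffix:
        # 12-hour clock: 12am maps to hour 0, 12pm stays at hour 12.
        if not 1 <= hour <= 12:
            raise ValueError(f"hour out of range for am/pm: {text!r}")
        hour = hour % 12 + (12 if suffix == "pm" else 0)
    elif hour > 23:
        raise ValueError(f"hour out of range: {text!r}")
    return time(hour, minute)


def in_window(now: time, start: time, end: time) -> bool:
    """True if now falls in [start, end); assumes start <= end (same day)."""
    return start <= now < end
```

With something like this, a job scheduled from 09:00 to 17:00 would only fire when `in_window` is true for the current time.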

My cloud backups go to a single B2 bucket to allow deduplication, which means I have 5 backups, plus “All”, displayed on the storage graph for that B2 bucket. It seems that no working color is specified for the sixth line on the graph. The sixth line is the same color as the first (All), and the sixth dot and label in the legend below the plot are black. In the HTML, the color spec for the sixth legend dot is color:Zgotmp1Z, and for the legend text it is rgba(104, 179, 200, 0.8). In the plot, the sixth line and dot have the same rgba color spec, which has the same RGB values as the first dot and line, which are specified as #68b3c8.
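For what it's worth, here is a short Python sketch of the two points above: it confirms that #68b3c8 and rgba(104, 179, 200, ...) are the same RGB color, and it shows one possible way to hand out as many distinct colors as there are lines so the palette never wraps around. The `distinct_colors` helper and its saturation and brightness values are my own invention for illustration, not the palette the GUI actually uses.

```python
import colorsys


def hex_to_rgb(hex_color: str) -> tuple[int, int, int]:
    """Convert '#rrggbb' to an (r, g, b) tuple of 0-255 integers."""
    digits = hex_color.lstrip("#")
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))


def distinct_colors(count: int, saturation: float = 0.5, value: float = 0.8) -> list[str]:
    """Return `count` hex colors with evenly spaced hues (hypothetical palette)."""
    colors = []
    for i in range(count):
        r, g, b = colorsys.hsv_to_rgb(i / count, saturation, value)
        colors.append("#{:02x}{:02x}{:02x}".format(
            round(r * 255), round(g * 255), round(b * 255)))
    return colors


print(hex_to_rgb("#68b3c8"))  # (104, 179, 200)
print(distinct_colors(6))     # six different colors, one per line on the graph
```

Generating the colors from the number of series is just one option; a longer fixed palette would work equally well for a handful of backups.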

Finally, in the longer term, I want to support the earlier request to be able to restore by first finding the file to be restored and then choosing which version to restore.